Backtester Interfaces - Part I

Position Sizing & Rebalancing

Table Of Contents

Recap

YAGNI

Rebalancing Our Positions

Rebalancing Design

Rebalancing Implementation

Generating Forecasts Design

Generating Forecasts Interface

Forecast assert() Calls

More assert() Calls

Bundling assert() Calls

Decoupling Instruments, Rules, Features & Signals

Rebalancing assert() Calls

Current Rebalancing Implementation

Decomposing Rebalancing

Improving Rebalancing Further

Future Improvements

Recap

In our last article, we took a closer look at some common OOP challenges and at how composition can better separate concerns and make the code more modular, using our two data sources (.csv files and a database) as an example.

It turned out that the DataStore class's only job is to fetch data from its underlying store. It doesn't need to know anything about further transformations or validity checks. That is the PriceReader's job! By abstracting the fetching mechanism away and injecting it into the PriceReader at runtime, we inverted the dependencies and cleaned up the inheritance tree.

As a general rule of thumb, inheritance (extending other classes) is only a good idea if Class B is always also a Class A. Just because we fetch data with a DataStore doesn't mean we always want to run checks and transformations on it. So a DataStore isn't necessarily always also a PriceReader.
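To make the recap a bit more tangible, here is a minimal sketch of that composition idea. It is not the code from the last article: the `fetch()` method, the `InMemoryStore` class and the simplified `fetch_price_series()` signature are assumptions made up for illustration, standing in for the real .csv and database stores.

```python
from typing import Protocol


class DataStore(Protocol):
    """Anything that can fetch raw rows for a symbol (hypothetical interface)."""

    def fetch(self, symbol: str) -> list[tuple[str, float]]: ...


class InMemoryStore:
    """Stand-in for a .csv or database store; it only fetches, nothing else."""

    def __init__(self, rows_by_symbol: dict[str, list[tuple[str, float]]]):
        self._rows_by_symbol = rows_by_symbol

    def fetch(self, symbol: str) -> list[tuple[str, float]]:
        return self._rows_by_symbol[symbol]


class PriceReader:
    """Owns checks and transformations; the store is injected at runtime."""

    def __init__(self, store: DataStore):
        self._store = store

    def fetch_price_series(self, symbol: str) -> dict[str, float]:
        rows = self._store.fetch(symbol)
        # The validity check lives in the reader, not in the store
        assert all(price > 0 for _, price in rows), "Prices must be positive"
        return dict(rows)


# Made-up sample rows, only to show the wiring
store = InMemoryStore({"BTC": [("2024-01-01", 42000.0), ("2024-01-02", 43000.0)]})
reader = PriceReader(store)
prices = reader.fetch_price_series("BTC")
```

The PriceReader "has a" store instead of "being a" store, so swapping the data source never touches the checking logic.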

YAGNI

Another one of these OOP buzzwords: YAGNI. The acronym stands for You Ain't Gonna Need It. It is a principle from extreme programming (XP) and states that you shouldn't add functionality until it is actually needed. Extreme programming has had a lot of influence, maybe even too much, on how modern programmers approach code bases. Even though it's a somewhat controversial topic nowadays, sparking heated debates about the right and wrong way to do things, its core practices like Test-Driven Development (TDD), Continuous Integration (CI) and YAGNI are worth understanding and considering in my opinion.

With our last refactoring, we kind of broke YAGNI. We didn't actually need to read in data from a .csv file for our current strategy. Our database is working just fine. I generally dislike implementing features that don't get used yet because it usually introduces complexity. However, the DataSources were kind of the perfect easy example to demonstrate how composition can work and improve flexibility. It also helped us shed some more light on the refactoring process in general and I know that we're definitely going to use that feature in the future.

From now on, we're going to take things a step back though. New features (and their complexity) will only get introduced when we actually need them. I'll also try to keep the discussion about code-changes to a minimum and only go into detail about how and why I think certain implementations better suit the job at hand to shift the focus more towards the high-level POV again.

We're still going to talk about the design of things and what assert() calls might make sense to make our codebase more robust. This will enable us to later mix and match different implementations without much additional maintenance and debugging effort.

Rebalancing Our Positions

I think we've spent enough time on the data fetching for now. It's time to get going and tackle porting over the rest of our old backtester. Currently if we run our backtest_refactored.py, we get the error AttributeError: 'NoneType' object has no attribute 'shift' on raw_usd_pnl = price_series.diff() * rebalanced_positions.shift(1).

This is not an assert() error! We need to fix that.

It's no wonder we're running into this error because we didn't implement the logic for rebalancing yet. Just like with our calculate_strat_pre_cost_sr(), if we look at the code bits needed to calculate our rebalancing, we can see that we need a whole bunch of things:

    [...]
    for index, row in df.iterrows():
        notional_per_contract = (row['close'] * 1 * contract_unit)
        daily_usd_vol = notional_per_contract * row['instr_perc_returns_vol']
        units_needed = daily_cash_risk / daily_usd_vol
        forecast = row['capped_forecast']
        ideal_pos_contracts = units_needed * forecast / 10

        if df.index.get_loc(index) > 0:
            prev_idx = df.index[df.index.get_loc(index) - 1]
            current_pos_contracts = df.at[prev_idx, 'rebalanced_pos_contracts']
            if np.isnan(current_pos_contracts):
                current_pos_contracts = 0

            contract_diff = ideal_pos_contracts - current_pos_contracts
            contract_deviation = abs(contract_diff) / abs(ideal_pos_contracts) * 100
            if contract_deviation > rebalance_err_threshold:
                [...]
                rebalanced_pos_contracts = ideal_pos_contracts
            else:
                [...]
                rebalanced_pos_contracts = current_pos_contracts

        df.at[index, 'rebalanced_pos_contracts'] = rebalanced_pos_contracts

Before we start copy-pasting over stuff from our old backtest.py let's quickly have a look at the design of things.

Rebalancing Design

The current design of rebalancing to an error threshold of 10% follows this article. It runs each day and looks something like this:

Specify annual risk target (VOL)

Calculate daily risk target (Cash)

Calculate ideal position size based on target & forecast (contracts)

Check how much current position deviates from ideal

Adjust current position if it deviates more than threshold

Each of these steps is phrased somewhat abstractly and - just like in our last articles - merely marks the "top" of a specific branch of implementation logic that is then followed from top to bottom. This is usually the case when talking about design.

In pseudo-code it looks like this:

    ann_vol_target = strategy.get_ann_vol_target()
    daily_target_risk = calc_daily_ctarget(ann_vol_target)
    signals = generate_signals(close_prices, EMA(8, 32))
    ideal_positions = calc_ideal_positions(daily_target_risk, signals)

    for ideal_pos in ideal_positions:
        cur_pos = get_cur_pos()
        deviation = calculate_deviation(cur_pos, ideal_pos)
        if deviation > 10%:
            cur_pos = ideal_pos
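To get a feeling for the magnitudes involved, the five steps can be traced once with plain numbers. The account balance, risk target and day count are the ones used throughout this article; the price, volatility, forecast and current position are made-up values purely for illustration.

```python
import math

account_balance = 10_000        # used throughout the article
ann_perc_risk_target = 0.20     # 20% annual risk target
trading_days_per_year = 365     # day count used in the article

# Steps 1 and 2: annual cash risk, then scaled down to a single day
ann_cash_risk_target = account_balance * ann_perc_risk_target
daily_cash_risk_target = ann_cash_risk_target / math.sqrt(trading_days_per_year)

# Hypothetical market snapshot (illustrative values only)
close_price = 50_000.0
daily_perc_vol = 0.03
forecast = 15.0                 # capped scale of -20..20 with 10 as the average

# Step 3: ideal position in contracts
daily_contract_risk = close_price * 1 * daily_perc_vol
ideal_pos = daily_cash_risk_target / daily_contract_risk * forecast / 10

# Steps 4 and 5: only trade if we are more than 10% away from the ideal position
current_pos = 0.10
deviation = abs(ideal_pos - current_pos) / abs(ideal_pos)
new_pos = ideal_pos if deviation > 0.10 else current_pos

print(f"daily cash risk target: {daily_cash_risk_target:.2f}")
print(f"ideal position: {ideal_pos:.4f}, deviation: {deviation:.2%}, new position: {new_pos:.4f}")
```

With these inputs the current position sits within the threshold of the ideal one, so nothing gets traded. That is the whole point of the threshold: small drifts shouldn't cost us fees.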

Since we didn't specify such a high-level interface before, we're going to copy-paste the whole loop including all its details for the sake of simplicity (it makes the tutorial easier to follow).

Rebalancing Implementation

    def generate_rebalanced_positions(price_series, rebalance_threshold):
        ann_perc_risk_target = 0.20
        account_balance = 10_000
        ann_cash_risk_target = account_balance * ann_perc_risk_target

        contract_unit = 1
        notional_exp_1_contract_series = 1 * price_series['close'] * contract_unit
        daily_instr_perc_risk_series = price_series['close'].pct_change().ewm(span=35, min_periods=10).std()
        daily_contract_risk_series = notional_exp_1_contract_series * daily_instr_perc_risk_series

        trading_days_per_year = 365
        daily_cash_risk_target = ann_cash_risk_target / np.sqrt(trading_days_per_year)
        contracts_needed_series = daily_cash_risk_target / daily_contract_risk_series

        forecast_series = generate_signals(price_series)
        avg_forecast = 10
        ideal_pos_series = contracts_needed_series * forecast_series / avg_forecast

        rebalanced_pos_series = []
        for i in range(len(ideal_pos_series)):
            # .iloc makes the positional lookup explicit
            ideal_pos_contracts = ideal_pos_series.iloc[i]
            # Compare against the previous *rebalanced* position, not the previous ideal one;
            # on the first bar there is no previous position, so start from the ideal one
            current_pos_contracts = rebalanced_pos_series[i-1] if i > 0 else ideal_pos_contracts
            if np.isnan(current_pos_contracts):
                current_pos_contracts = 0

            contract_diff = ideal_pos_contracts - current_pos_contracts
            deviation = abs(contract_diff) / abs(ideal_pos_contracts) * 100
            if deviation > rebalance_threshold:
                rebalanced_pos_series.append(ideal_pos_contracts)
            else:
                rebalanced_pos_series.append(current_pos_contracts)

        return rebalanced_pos_series

Again, this looks like quite a handful of things happening. Some of them are specific to the trading account and overall config, some are specific to the instrument traded, and so on, so they don't really belong here. We'll go through them step by step while refactoring.

What's more important to note is that for this calculation to work we need at least 2 things: a price series and a forecast series. Let's have a look at the forecast series generate_signals(price_series) first:

Generating Forecasts Design

The forecast/signal design follows this article and is rather simple:

Specify lookbacks for EMAC

Calculate EMAC series

Calculate raw forecast series (fast - slow)

Volatility normalize forecast series

Rescale forecasts to absolute average of 10

Cap forecasts to -20,20

Generating Forecasts Interface

    def generate_signals(price_series):
        fast_lookback = 2
        slow_lookback = fast_lookback * 4
        emac_fast = price_series['close'].ewm(span=fast_lookback, min_periods=fast_lookback).mean()
        emac_slow = price_series['close'].ewm(span=slow_lookback, min_periods=slow_lookback).mean()
        raw_forecast = emac_fast - emac_slow

        instr_vol = price_series['close'].diff().ewm(span=35, min_periods=10).std()
        vol_normalized_forecast = raw_forecast / instr_vol

        trading_days_in_year = 365
        abs_values = vol_normalized_forecast.abs().expanding(min_periods=trading_days_in_year * 2)
        avg_abs_value = abs_values.median()
        target_avg = 10.0
        scaling_factor = target_avg / avg_abs_value
        scaled_forecast = vol_normalized_forecast * scaling_factor

        capped_forecast = scaled_forecast.clip(lower=-20, upper=20)
        return capped_forecast

I can immediately see some duplication. And again there are some things that don't really belong here; for example, the rescaling of forecasts is not really tied to any specific rule. We should decouple that. But before we do that, let's think about some assert() calls first so we can be sure our changes don't break anything.

Forecast assert() Calls

To check if our forecasts are behaving as they should, we can put the following minimum set of assert() calls in place:

    signal_series = generate_signals(price_series)

    # Test structural integrity
    assert len(price_series) == len(signal_series), "Signal series length mismatch"

    # Test Forecast Boundaries
    assert signal_series.max() <= 20, "Forecast exceeds upper cap"
    assert signal_series.min() >= -20, "Forecast exceeds lower cap"

    # Test Absolute Average
    expected_abs_avg_forecast = 10.0
    dev_threshold = 0.10
    signal_abs_avg = signal_series.abs().median()
    assert np.isclose(
        signal_abs_avg,
        expected_abs_avg_forecast,
        rtol=dev_threshold
    ), f"""Forecast average deviates more than {dev_threshold} from target.
    Expected: {expected_abs_avg_forecast},
    Found: {signal_abs_avg}"""

This is about everything we want to know for our model right now. The asserts are trading rule agnostic. If we run it, our implementation seems to be already working!

More assert() Calls

As already stated we want to decouple the trading rules from the rescaling of their signals. When rescaling we're only concerned with raw forecasts from whatever rule.

    def generate_signals(trading_rule, price_series):
        raw_forecast = trading_rule.get_raw_forecast(price_series['close'])
        [...]

    class EMAC(TradingRule):
        def __init__(self, fast_lookback=2, slow_lookback=None):
            self.fast_lookback = fast_lookback
            self.slow_lookback = slow_lookback or fast_lookback * 4

        def get_raw_forecast(self, data_series):
            emac_fast = data_series.ewm(
                span=self.fast_lookback,
                min_periods=self.fast_lookback
            ).mean()
            emac_slow = data_series.ewm(
                span=self.slow_lookback,
                min_periods=self.slow_lookback
            ).mean()
            return emac_fast - emac_slow

    fast_lookback = 8
    slow_lookback = fast_lookback * 4
    emac = EMAC(fast_lookback, slow_lookback)
    signal_series = generate_signals(emac, price_series)

assert()

[...]

Now we can also assert() test our trading rule!

assert isinstance(raw_forecast, pd.Series)

assert len(raw_forecast) == len(price_series)

assert raw_forecast.index.equals(price_series.index)

assert not raw_forecast.isnull().all(), "Forecast should not be all NaN"

assert raw_forecast.isnull().sum() < len(raw_forecast), "Should have valid forecasts"

Note that this is not a finished list of tests but only some basic structural checks.

Bundling assert() Calls

We have an awful lot of asserts scattered throughout our script now, so it's a good time to group them into dedicated functions. It's generally a good idea to take a breather here and there when working on your code base. There are almost always some things you can improve, even if it's just a simple regrouping or renaming. I'm not going to paste the refactoring here because it's again a big blob of code and I just promised in the intro to keep that to a minimum. I basically just bundled the price_data, trading_rule, forecast_scaling, performance_metric and trading_costs assert calls into their own functions. As always, you can find the link to the full code at the bottom of this article.
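Just to give a rough idea of what such a bundle can look like without pasting the whole refactoring, here's a small sketch. The function names `assert_trading_rule_output` and `assert_forecast_scaling` are placeholders I made up for this example (the repository may name and group them differently), and the data at the bottom is synthetic.

```python
import numpy as np
import pandas as pd


def assert_trading_rule_output(raw_forecast, price_series):
    """Structural checks on a rule's raw forecast (hypothetical helper name)."""
    assert isinstance(raw_forecast, pd.Series)
    assert len(raw_forecast) == len(price_series)
    assert raw_forecast.index.equals(price_series.index)
    assert not raw_forecast.isnull().all(), "Forecast should not be all NaN"


def assert_forecast_scaling(signal_series, cap=20.0, target_abs_avg=10.0, rtol=0.10):
    """Checks the cap and the absolute average (hypothetical helper name)."""
    assert signal_series.max() <= cap, "Forecast exceeds upper cap"
    assert signal_series.min() >= -cap, "Forecast exceeds lower cap"
    abs_avg = signal_series.abs().median()
    assert np.isclose(abs_avg, target_abs_avg, rtol=rtol), (
        f"Forecast average deviates more than {rtol:.0%} from target. "
        f"Expected: {target_abs_avg}, Found: {abs_avg}"
    )


# Tiny synthetic example (made-up values, not backtest output)
index = pd.date_range("2024-01-01", periods=5, freq="D")
prices = pd.DataFrame({"close": [100.0, 101.0, 99.0, 102.0, 103.0]}, index=index)
signals = pd.Series([-20.0, -10.0, 10.0, 10.0, 20.0], index=index)

assert_trading_rule_output(signals, prices)
assert_forecast_scaling(signals)
```

Each function covers one concern, so the main script only needs a single call per concern.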

Decoupling Instruments, Rules, Features & Signals

With our asserts in place, we can start improving our current implementation's structure. We want to be able to generate a stream of signals for any instrument, using any rule on any feature (data column).

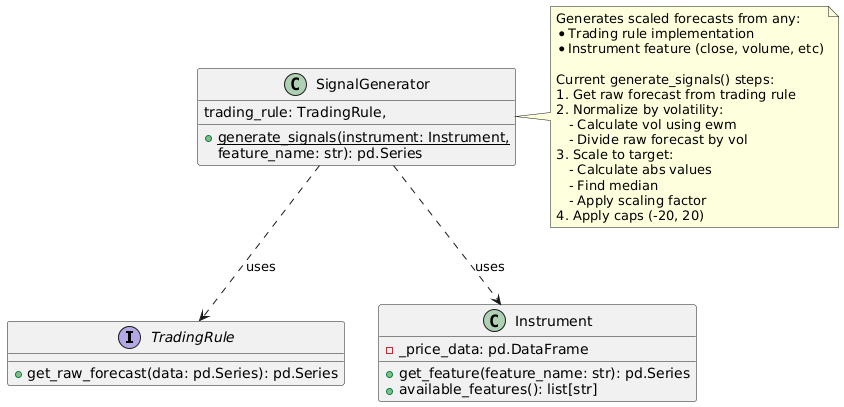

We have the concrete example of rule EMAC(8,32) on feature close prices from BTCUSD. We're going to create a new Instrument class that holds the price data for the instrument and some meta information like contract specs. A diagram for the solution looks like this:

Here's the "top-level" implementation to compare it with its predecessor:

    def generate_signals(instrument, trading_rule, feature_name):
        try:
            feature_series = instrument.get_feature(feature_name)
        except ValueError as e:
            available = instrument.available_features()
            raise ValueError(f"{str(e)}. Available features: {available}")

        raw_forecast = trading_rule.get_raw_forecast(feature_series)

        instr_vol = feature_series.diff().ewm(span=35, min_periods=10).std()
        vol_normalized_forecast = raw_forecast / instr_vol

        abs_values = vol_normalized_forecast.abs().expanding(
            min_periods=instrument.trading_days_in_year * 2
        )
        avg_abs_value = abs_values.median()
        target_avg = 10.0
        scaling_factor = target_avg / avg_abs_value
        scaled_forecast = vol_normalized_forecast * scaling_factor

        capped_forecast = scaled_forecast.clip(lower=-20, upper=20)
        return capped_forecast

    trading_frequency = '1D'
    symbolname = 'BTC'
    feature_name = 'close'

    db_store = DBStore()
    db_reader = PriceReader(db_store, index_column=0, price_column=1)
    price_series = db_reader.fetch_price_series(symbolname, trading_frequency)

    instrument = Instrument(
        symbolname,
        price_series,
        trading_days_in_year=365
    )

    fast_lookback = 8
    slow_lookback = fast_lookback * 4
    emac = EMAC(fast_lookback, slow_lookback)

    # Argument order follows the signature: instrument, trading rule, feature name
    signal_series = generate_signals(instrument, emac, feature_name)
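The Instrument class itself isn't shown above. A minimal sketch that would satisfy the calls used in this article (`get_feature()`, `available_features()`, `trading_days_in_year` and `contract_unit`) could look like the following. Treat it as an assumption about the shape of the class, not as the version in the repository; the error message and the sample data are made up.

```python
import pandas as pd


class Instrument:
    """Holds price data plus contract metadata (sketch, not the repository's class)."""

    def __init__(self, symbol, price_data, trading_days_in_year=365, contract_unit=1):
        self.symbol = symbol
        self.trading_days_in_year = trading_days_in_year
        self.contract_unit = contract_unit
        self._price_data = price_data

    def available_features(self):
        # Every data column counts as a feature
        return list(self._price_data.columns)

    def get_feature(self, feature_name):
        if feature_name not in self._price_data.columns:
            raise ValueError(f"Unknown feature '{feature_name}' for {self.symbol}")
        return self._price_data[feature_name]


# Made-up price data, only to show the calls
price_data = pd.DataFrame(
    {"close": [100.0, 101.0, 102.0]},
    index=pd.date_range("2024-01-01", periods=3, freq="D"),
)
instrument = Instrument("BTC", price_data, trading_days_in_year=365)
close = instrument.get_feature("close")
```

Raising a ValueError for unknown features is what lets generate_signals() catch it and append the list of available features to the message.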

Rebalancing assert() Calls

With that out of the way, we can start working on the rebalancing part. We're going to use the same approach: first porting over the old looping logic, putting some asserts in place, decoupling where possible, and later replacing it with a better, more performant vector-based approach.

The easiest way to check if our ported implementation is working as expected - assuming we were correct before - is to just export the results from our old backtest.py to csv and compare them with our new results.

    # exporting old ones
    df[['rebalanced_pos_contracts']].to_csv('rebalanced_pos_contracts.csv')

    # comparing against new ones
    rebalance_err_threshold = 10
    positions = generate_rebalanced_positions(signal_series, instrument, rebalance_err_threshold)
    expected_positions_df = pd.read_csv('rebalanced_pos_contracts.csv')

    positions_array = np.array(positions)
    expected_array = expected_positions_df['rebalanced_pos_contracts'].to_numpy()
    rel_diff = np.abs(positions_array - expected_array) / np.abs(expected_array)
    rtol = 0.0001

    if (rel_diff > rtol).any():
        print("\nPositions deviating more than {:.2%} relative tolerance:".format(rtol))
        print("Index Expected Actual Rel.Diff")
        print("-" * 45)
        deviant_indices = np.where(rel_diff > rtol)[0]
        for idx in deviant_indices:
            print(f"{idx:5d} {expected_array[idx]:9.4f} {positions_array[idx]:9.4f} {rel_diff[idx]:9.2%}")
        raise AssertionError("Rebalanced positions do not match expected values")

    print("All positions within {:.2%} relative tolerance".format(rtol))

And here are some more general assert calls that also make sense:

# More General Tests

assert isinstance(positions, pd.Series), "Rebalanced positions should be a pandas Series"

assert len(positions) == len(price_series), "Length mismatch between positions and price series"

assert positions.index.equals(price_series.index), "Index mismatch between positions and price series"

assert not positions.isnull().all(), "Rebalanced positions should not be all NaN"

assert positions.isnull().sum() < len(positions), "Rebalanced positions should have valid values"
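As a side note, if the hand-rolled comparison above feels heavy, NumPy ships a helper that performs a similar relative-tolerance check and prints a mismatch summary on failure. This is an alternative sketch with made-up numbers, not what the backtester currently uses.

```python
import numpy as np

# Made-up values standing in for the exported and the newly computed positions
expected = np.array([np.nan, 0.0500, 0.0500, 0.0731])
actual = np.array([np.nan, 0.0500, 0.0500, 0.0731000001])

# Raises AssertionError if any element differs by more than rtol * |expected|;
# NaNs in matching positions count as equal by default (equal_nan=True)
np.testing.assert_allclose(actual, expected, rtol=1e-4)
```

One difference to keep in mind: with the default atol=0, an expected value of exactly 0 must be matched exactly, whereas the manual version divides by zero at those points.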

If we run our copy-pasted version, everything seems to be working fine. It's time to improve the code's structure again!

Current Rebalancing Implementation

    def generate_rebalanced_positions(signals, instrument, rebalance_threshold):
        ann_perc_risk_target = 0.20
        account_balance = 10_000
        ann_cash_risk_target = account_balance * ann_perc_risk_target

        contract_unit = instrument.contract_unit
        notional_exp_1_contract_series = 1 * instrument.get_feature('close') * contract_unit
        daily_instr_perc_risk_series = instrument.get_feature('close').pct_change().ewm(span=35, min_periods=10).std()
        daily_contract_risk_series = notional_exp_1_contract_series * daily_instr_perc_risk_series

        trading_days_per_year = instrument.trading_days_in_year
        daily_cash_risk_target = ann_cash_risk_target / np.sqrt(trading_days_per_year)
        contracts_needed_series = daily_cash_risk_target / daily_contract_risk_series

        avg_forecast = 10
        ideal_pos_series = contracts_needed_series * signals / avg_forecast
        ideal_pos_series = ideal_pos_series.fillna(0)

        rebalanced_pos_series = pd.DataFrame(index=ideal_pos_series.index, columns=['rebalanced_pos'])

        for index in ideal_pos_series.index:
            if ideal_pos_series.index.get_loc(index) == 0:
                current_pos_contracts = ideal_pos_series[index]
            else:
                prev_idx = ideal_pos_series.index[ideal_pos_series.index.get_loc(index) - 1]
                current_pos_contracts = rebalanced_pos_series.at[prev_idx, 'rebalanced_pos']

            if np.isnan(current_pos_contracts):
                current_pos_contracts = 0

            contract_diff = ideal_pos_series[index] - current_pos_contracts
            deviation = abs(contract_diff) / abs(ideal_pos_series[index]) * 100

            if deviation > rebalance_threshold:
                rebalanced_pos_series.at[index, 'rebalanced_pos'] = ideal_pos_series[index]
            else:
                rebalanced_pos_series.at[index, 'rebalanced_pos'] = current_pos_contracts

        return rebalanced_pos_series['rebalanced_pos']

Now this looks like a lot. And it is. Generally, the longer a function is, the more difficult it is to understand. Decomposing it will almost always make it easier to work with. A good rule of thumb: whenever you feel the need to comment something, move the commented code into a function named after its intention, so it needs no further clarification.

Decomposing Rebalancing

A great way to stay true to your design while decomposing is to use the design as a template for your interfaces. When we treat each of the 5 rebalancing steps as its own interface, we can come very close to our previous pseudo-code solution using Python:

Specify annual risk target (VOL)

Calculate daily risk target (Cash)

Calculate ideal position size based on target & forecast (contracts)

Check how much current position deviates from ideal

Adjust current position if it deviates more than threshold

    def generate_rebalanced_positions(signals, instrument, rebalance_threshold):
        # Step 1: Annual risk target
        account_balance = 10_000
        ann_perc_risk_target = 0.20 # 20%
        ann_cash_risk_target = calculate_annual_risk_target(
            account_balance,
            ann_perc_risk_target
        )

        # Step 2: Convert to daily cash risk target
        daily_cash_risk_target = calculate_daily_risk_target(
            ann_cash_risk_target,
            instrument.trading_days_in_year
        )

        # Step 3: Calculate ideal position
        ideal_position = calculate_ideal_positions(
            instrument,
            daily_cash_risk_target,
            signals
        )

        # Step 4 & 5: Calculate deviation and rebalance
        # Current Rebalancing Logic
        [...]

This already makes the code much more readable and easier to understand. There were no comments before, and the Step 1-5 comments used here could easily be omitted thanks to the names we gave the functions. If I had to choose, I'd rather have had the comments in the code before our refactoring: we didn't have them there initially, but they would've made it much easier to read the code without doing mental gymnastics.

Usually a big block of code, which is better understood with comments, can be replaced with a function named based on that comment. When trying to pull out these codebits into functions it's usually easiest to find things that are similar or "belong" together. And that is exactly what we did here. But there's still room for improvement!
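The three helpers called in the decomposed version aren't shown in this article. Assuming they simply wrap the corresponding lines of the monolithic function, a sketch could look like this. The function bodies are lifted from the earlier code; `DemoInstrument` and the sample data are made up so that the snippet runs on its own.

```python
import numpy as np
import pandas as pd


def calculate_annual_risk_target(account_balance, ann_perc_risk_target):
    """Step 1: annual risk target in cash."""
    return account_balance * ann_perc_risk_target


def calculate_daily_risk_target(ann_cash_risk_target, trading_days_in_year):
    """Step 2: scale the annual target down to one day."""
    return ann_cash_risk_target / np.sqrt(trading_days_in_year)


def calculate_ideal_positions(instrument, daily_cash_risk_target, signals, avg_forecast=10):
    """Step 3: contracts needed for the daily target, scaled by the forecast."""
    close = instrument.get_feature("close")
    notional_per_contract = close * instrument.contract_unit
    daily_perc_vol = close.pct_change().ewm(span=35, min_periods=10).std()
    contracts_needed = daily_cash_risk_target / (notional_per_contract * daily_perc_vol)
    return (contracts_needed * signals / avg_forecast).fillna(0)


class DemoInstrument:
    """Stand-in exposing only what the helpers use (hypothetical)."""

    contract_unit = 1
    trading_days_in_year = 365

    def __init__(self, close):
        self._close = close

    def get_feature(self, feature_name):
        return self._close


index = pd.date_range("2024-01-01", periods=30, freq="D")
close = pd.Series([100.0 + (i % 3) for i in range(30)], index=index)  # made-up prices
signals = pd.Series(10.0, index=index)  # constant "average" forecast

instrument = DemoInstrument(close)
ann_target = calculate_annual_risk_target(10_000, 0.20)
daily_target = calculate_daily_risk_target(ann_target, instrument.trading_days_in_year)
ideal_positions = calculate_ideal_positions(instrument, daily_target, signals)
```

Each function name now says what the old inline comment would have said, which is exactly the rule of thumb from above.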

Improving Rebalancing Further

Other signs that decomposition is needed are conditionals and loops. Loops, like in our rebalancing logic, fit nicely into their own functions, while conditionals like if/else statements or switches are easier to work with when tucked away behind more general interfaces.

In addition, we still have some duplication to get rid of, mainly the VOL calculation. We're also relying on hardcoded local temporary variables for our calculations. And to name even more issues, the current generate_rebalanced_positions() function is more of a Facade than a clean interface. Facade objects provide simplified interfaces to complex subsystems with a lot of moving parts under the hood, so it gets easier for us to talk to them. In my opinion, though, its implementation is way too specific and mixes too many responsibilities, which makes it less flexible. Facades usually work best for specific end-user use cases that get used repeatedly.

Ultimately, we want to be able to mix and match different things quickly and easily, so I think we should better separate the different concerns like risk targeting, position sizing and volatility estimation.

I'm not going to show everything in detail here, just the end result. I will be running the backtester after every change and carefully observing what the assert() calls spit out, circling back and re-evaluating if we deviate from our previous results.

[...]

    def generate_rebalanced_positions(ideal_positions, rebalance_threshold):
        rebalanced = pd.Series(index=ideal_positions.index, dtype=float)

        for idx in ideal_positions.index:
            if idx == ideal_positions.index[0]:
                rebalanced[idx] = ideal_positions[idx]
                continue

            prev_pos = ideal_positions.index.get_loc(idx) - 1
            current_position = rebalanced.iloc[prev_pos]
            if pd.isna(current_position):
                current_position = 0

            deviation = zero_safe_divide(
                abs(ideal_positions[idx] - current_position),
                abs(ideal_positions[idx])
            )
            rebalanced[idx] = ideal_positions[idx] if deviation > rebalance_threshold else current_position

        return rebalanced

    if __name__ == "__main__":
        [...]

        # Step 1: Annual risk target
        account_balance = 10_000
        ann_perc_risk_target = 0.20 # 20%
        ann_cash_risk_target = calculate_annual_risk_target(
            account_balance,
            ann_perc_risk_target
        )

        # Step 2: Convert to daily cash risk target
        daily_cash_risk_target = calculate_daily_risk_target(
            ann_cash_risk_target,
            instrument.trading_days_in_year
        )

        # Step 3: Calculate ideal position
        ideal_positions = calculate_ideal_positions(
            instrument,
            daily_cash_risk_target,
            signals
        )

        # Step 4 & 5: Calculate deviation and rebalance
        rebalance_err_threshold = 0.10
        rebalanced_positions = generate_rebalanced_positions(
            ideal_positions=ideal_positions,
            rebalance_threshold=rebalance_err_threshold
        )

Quick summary of what I did here: I decomposed the overly specific interface by splitting the calculation of ideal_positions and the rebalancing into their own functions, each with only one responsibility. I moved the flow of logic to the top-level __main__ block, removed the duplicated VOL calculation code, and migrated all tests into their own file, tests.py.
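The zero_safe_divide() helper used in the rebalancing loop isn't shown here. Its exact behaviour in the repository may differ, so take the following as one plausible sketch: it treats 0/0 as "no deviation" and x/0 as "infinite deviation", which keeps a flat position flat during the warm-up period and forces a rebalance whenever the ideal position drops to exactly zero.

```python
import math


def zero_safe_divide(numerator: float, denominator: float) -> float:
    """Divide without raising on a zero denominator (assumed behaviour)."""
    if denominator == 0:
        # 0/0: nothing to rebalance; x/0: we hold something but should hold nothing
        return 0.0 if numerator == 0 else math.inf
    return numerator / denominator


print(zero_safe_divide(0.0, 0.0))
print(zero_safe_divide(0.05, 0.0))
print(zero_safe_divide(1.0, 4.0))
```

Whatever the concrete return values are, the point is that the deviation check gets a well-defined number instead of an exception or a warning.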

The full code can be found in this GitHub repository

Future Improvements

There's still a lot we can improve. We're heavily reliant on pandas dataframes. A more general interface would make it easier to switch out the underlying data structure in the future. Before we do this though, we can explore the concept of vectorization using our current rebalancing loop as an example. After having a look at both concepts in isolation, we can probably bundle them up into one nice big refactoring.

There are still a handful of so-called magic numbers (a fancy term for hardcoded literals) throughout the code. Introducing a Config object to hold them might be a good idea. For now, I've just put them into constants at the beginning of the execution.
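As a rough idea of where this could go, a frozen dataclass is one lightweight option for such a Config object. The class name `BacktestConfig` and its field names are hypothetical; the default values are the literals used in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BacktestConfig:
    """Hypothetical container for the literals scattered through the script."""

    account_balance: float = 10_000
    ann_perc_risk_target: float = 0.20
    rebalance_err_threshold: float = 0.10
    avg_forecast: float = 10.0
    forecast_cap: float = 20.0
    vol_span: int = 35
    vol_min_periods: int = 10


config = BacktestConfig()
ann_cash_risk_target = config.account_balance * config.ann_perc_risk_target
```

Because the dataclass is frozen, accidentally overwriting a setting halfway through a backtest raises an error instead of silently changing the results.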

We should also take the time to talk about error handling. It's always a good idea to standardize error handling where possible. Just like last time, error messages that we define ourselves and handle via try/except will be far more helpful than the cryptic, generic ones.

This article is already quite long though. So the only thing left to say now is - as always:

Happy coding!

- Hōrōshi バガボンド

Disclaimer: The content and information provided by Vagabond Research, including all other materials, are for educational and informational purposes only and should not be considered financial advice or a recommendation to buy or sell any type of security or investment. Vagabond Research and its members are not currently regulated or authorised by the FCA, SEC, CFTC, or any other regulatory body to give investment advice. Always conduct your own research and consult with a licensed financial professional before making investment decisions. Trading and investing can involve significant risk, and you should understand these risks before making any financial decisions. Backtested and actual historic results are no guarantee of future performance. Use of the material presented is entirely at your own risk.