Refactoring - Part III

Composition Over Inheritance

Table Of Contents

Recap

Refused Bequest

Graceful Error Handling

Profiling The SQL Query

Using A Composite Index

Tight Coupling

Composition Over Inheritance

Composition Solution Overview

Next Up

Recap

In our last article we started refactoring the structure of our backtesters code to make it easier to work with in the future. It turned out that in order to port over the calculations, we need to kind of port over everything from our old backtest. Using assert() calls as a test-suite proxy, we started porting over the logic to fetch historical prices while trying to migrate the implementation to calculate our strategies pre-cost sharpe ratio. Today we're going to continue that process.

But before we do anything else, let's quickly fix a bug we introduced while porting the price fetching logic for multiple datasources.

Refused Bequest

Right now our DBreaders fetch_raw_price_series() doesn't use the trading_frequency parameter at all. This is bad! Having unused code is an anti-pattern and should be avoided.

In fact, if we were using a compiled language, the compiler would squawk at us with something along the lines of warning: unused variable: trading_frequency.

This anti-pattern or code-smell is called refused bequest. We're breaking at least two of the SOLID principles here, namely the Interface Segregation Principle (ISP) and Liskov Substitution Principle (LSP).

ISP states that "clients should not be forced to depend upon interfaces they do not use." We're breaking it because we're not using the interface to its full extend.

According to the LSP, objects of a superclass should be replaceable with objects of its subclasses without breaking the application. Right now we would talk to the CSVReader (fetch_price_serieS('BTC', '1D')) differently than to the DBReader (fetch_price_series('BTC')) even though they are both subclasses of PriceDataSource. The application wouldn't necessarily crash but that's just because python is more lax than other languages.

Luckily for us, in this case it's not really a design flaw but rather a bug. We just didn't need to specify the timeframe for our pricedata when fetching from the database yet because we're only working with EOD data. Whenever we're requesting data from the DBreader we always get the trading timeframe 1D. When reading from .csv files however, we needed to specify the timeframe because the source of the data was embedded in the files name: BTC_1D.csv. Ultimately we want to be able to fetch lots of different price series using both readers.

We can simply get rid of this bug by incorporating the trading_frequency in the SQL phrase. From our init.sql file we can see that the tables column name is interval:

CREATE TABLE IF NOT EXISTS ohlcv (

id SERIAL PRIMARY KEY,

coin_id INTEGER REFERENCES coins(id),

time_open TIMESTAMP,

time_close TIMESTAMP,

time_high TIMESTAMP,

time_low TIMESTAMP,

open DOUBLE PRECISION,

high DOUBLE PRECISION,

low DOUBLE PRECISION,

close DOUBLE PRECISION,

volume DOUBLE PRECISION,

market_cap DOUBLE PRECISION,

interval VARCHAR(120)

);To use trading_frequency we simply have to mix it into our SQL query string:

cur.execute(f"""

SELECT ohlcv.time_close, ohlcv.close

FROM ohlcv

JOIN coins ON ohlcv.coin_id = coins.id

WHERE coins.symbol = '{symbolname}'

AND ohlcv.interval = '{trading_frequency}'

ORDER BY ohlcv.time_close ASC;

""")

If we run the backtester_refactored.py we get no errors (other than AttributeError: 'NoneType' object has no attribute 'shift' on rebalanced_positions.shift(1) but that's just because we didn't implement it yet. We're still working on fetch_price_series(). Great, that seems to be working. What happens if we put in something different than 1D though?

# KeyError: 'time_close'That's not from our assert() call! Something is going wrong during the data fetching so our assert() call doesn't even get the chance to check the price series for validity.

Graceful Error Handling

It's honestly no wonder that the application is breaking. If we specify something different than 1D as trading_frequency we won't be able to fetch it from the database yet because we only have 1D data in it!

The error message we're seeing however is rather cryptic. It doesn't tell us about this. Of course, with a little bit of brainpower, we can arrive at the conclusion that there might be no data returned. But there is a more elegant solution that saves us this brainpower!

If we build in a way to handle the case when no data was found, we can first of all save us the hassle of debugging it ourselves, but also enable the clients that use the fetch_price_series() function to realize a faulty state and react to it.

class NoDataFoundError(Exception):

"""Raised when a query returns no data"""

pass

class DBReader(PriceDataSource):

def fetch_raw_price_series(self, symbolname, trading_frequency):

[...]

cur = conn.cursor()

cur.execute(f"""

SELECT ohlcv.time_close, ohlcv.close

FROM ohlcv

JOIN coins ON ohlcv.coin_id = coins.id

WHERE coins.symbol = '{symbolname}'

AND ohlcv.interval = '{trading_frequency}'

ORDER BY ohlcv.time_close ASC;

""")

rows = cur.fetchall()

cur.close()

conn.close()

if not rows:

raise NoDataFoundError(f"No data found for symbol '{symbolname}' with frequency '{trading_frequency}'")

return pd.DataFrame(rows)If we now run it again, we get data_sources.NoDataFoundError: No data found for symbol 'BTC' with frequency '3D'. This is far more helpful than KeyError: 'time_close'. Clients, which our backtester is one of, using the fetch_price_series() interface can now react to it:

trading_frequency = '3D'

symbolname = 'BTC'

index_column = 0

price_column = 1

db_reader = DBReader(index_column, price_column)

from data_sources import NoDataFoundError

try:

price_series = db_reader.fetch_price_series(symbolname, trading_frequency)

except NoDataFoundError:

# do stuff in case we couldn't find dataUsing custom (or built-in) exceptions in combination with try/except blocks is one of the easiest ways to make your application more greaceful. It can be used to prevent crashes by handling edge cases and makes failures more predictable. Any time your application runs into a yet undefined state - like the cryptic time_close error before - it's worth analysing it a little more and provide more meaningful messages that allow for fallback behaviour.

Profiling The SQL Query

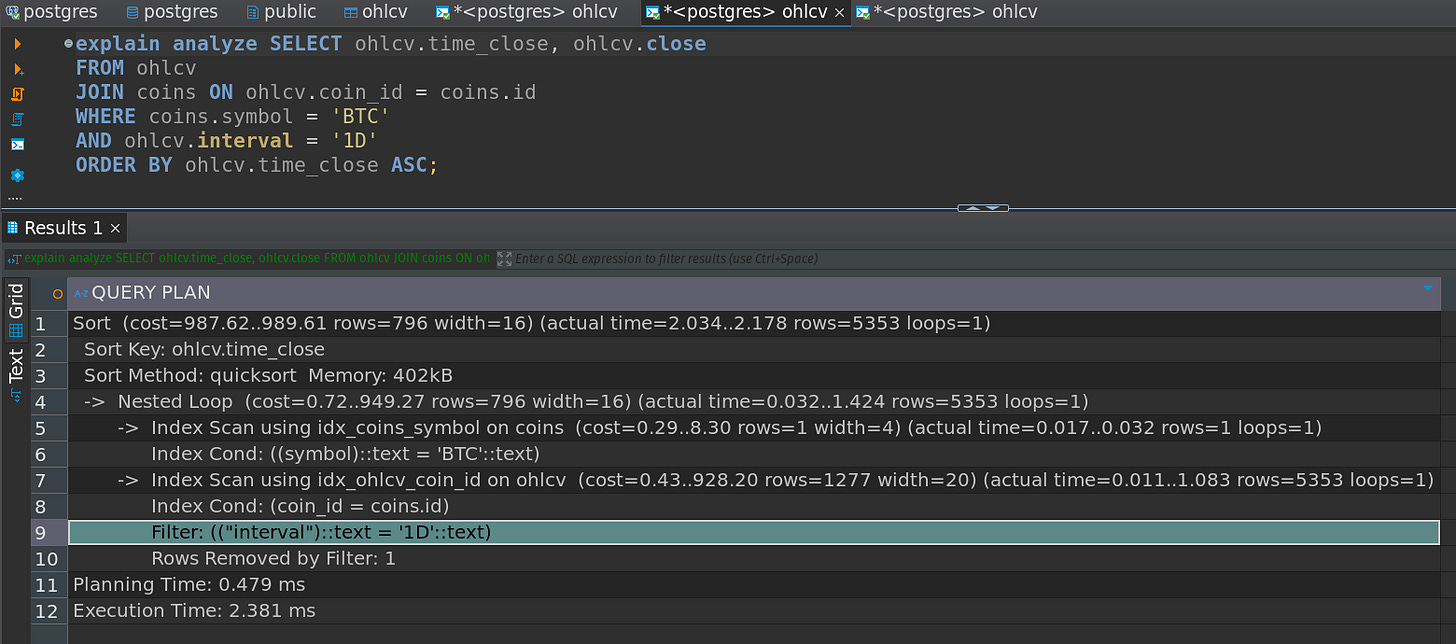

Let's quickly have a look at the sequence of steps the database optimizer is choosing when executing our new search query using the EXPLAIN ANALYZE statement. If you don't know what the hell I'm talking about, read this article first!

Surprisingly the query planner comes up with a very efficient execution that uses INDEX SCANS on each column. Don't get fooled by this! We only have 1D data in our database so there isn't really much for the optimizer to sift through. When we start adding different frequencies and data origins, things can become quite messy quickly, especially when using lots of lower timeframe data.

Since we're now quering for more than one column, it's a good idea to index it! In reality it's not all that much of a difference right now because the coin_id is still unique. In the future though we're going to fetch, persist and retrieve data from more than one datasource. Different exchanges might have different prices for each coin.

Even though we didn't specify the future database structure and how to model that relationship between coins, their prices and frequencies across different exchanges yet, it's still a good idea to have a look at some mapping techniques we can use to increase the queries performance.

Using A Composite Index

We can create a compound index that includes both coin_id and interval like so:

CREATE INDEX idx_ohlcv_cid_interval_composite ON ohlcv(coin_id, interval);A composite index works great if the coin_id happens to become non-unique. For example if we continue to persist data from different exchanges for the same coin in one table, the SQL optimizer won't be able to use an INDEX SCAN on it anymore. We need to team coin_id up with an additional column that makes it unique in conjunction again.

Again, in this case it doesn't make much of a difference, but it's a good example to illustrate the process anyway. Since we've created a composite index on coin_id and interval, each query looking for these columns in combination will be treated as a primary key again, enabling the optimizer to use an INDEX SCAN. We're going to do the same thing on origin later when we introduce different exchanges as dataproviders.

The order of a concatenated index has impact on its usability! The database can't use single columns from a concatenated index arbitrarily. A composite index is just a B-tree that keeps the data indexed in a sorted list (again, see here). The first column coin_id is the primary sort criterion. The second column interval only determines the order if two entries have the same value in the first column. So it first sorts by coin_id and then by interval. If you all of a sudden start searching only for interval it would be like flipping through a phonebook trying to find someone by his first name. The SQL optimizer cannot use an INDEX SCAN and would very likely choose the costly and time consuming FULL TABLE SCAN mechanism instead. Searching for coin_id alone however still works because it's the first sorting technique used.

Tight Coupling

Now back to topic! Improving the structure of our code.

Our current solution, even though it improved things by removing duplication, is not really a good one in my opinion. It is inheritance based, which comes with its own challenges and problems.

Both, DBReader and CSVReader extend PriceDataSource and are therefor permanently coupled to it. They - and any other Reader class extending it - must implement all abstract methods. While it's true that our abstract base class PriceDataSource defines a general contract to work with, it also enforces implementation details and couples the data fetching with its processing. Changes to the base class also force changes to all derived classes, which might not be what we want all the time. In addition it'll be harder to isolate the database or processing logic to test and mock away dependencies.

To make it easier to test and work with our design, we can decouple it a bit using another approach.

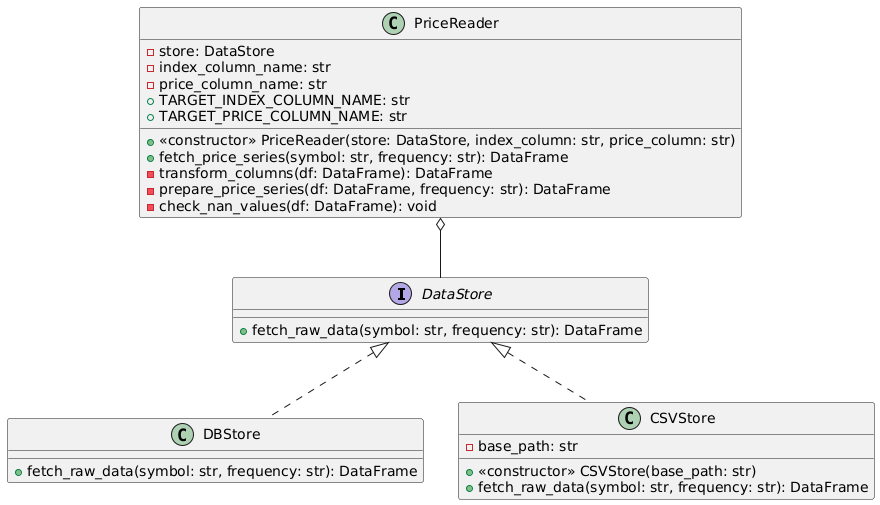

Composition Over Inheritance

I'd argue that the data stores don't really need to know anything about the price processing and the price processing also doesn't need to know anything about the data source implementations. We should decouple them by creating two separate interfaces, one for the data access, one for the data processing.

from abc import ABC, abstractmethod

# Abstract interface for data stores - defines minimal contract

class DataStore(ABC):

@abstractmethod

def fetch_raw_data(self, symbol, frequency):

"""Fetch raw price data from source"""

pass

# Main price processing class - uses composition via DataStore dependency

class PriceReader:

def __init__(self, store):

# Dependency injection of data store

self.store = store

def fetch_price_series(self, symbol, frequency):

pass

def transform_columns(self, df):

pass

def prepare_price_series(self, df, frequency):

passThe DataStore class still acts as a common baseclass among concrete data store implementations. It's only job is to enforce data stores to implement the fetch_raw_data() interface. Without it a datastore wouldn't make any sense.

The new PriceReader class is kind of like our PriceDataSource class before. It acts as the preprocessing unit of the data we want to work with in our backtester. Of course it needs data to process just as before. We decoupled that bit though!

Before, the PriceDataSource class was the base class to extend, defining an abstract method named fetch_raw_price_series(). Just as with our new DataStore class, any class extending it had to implement it. But in addition, any class that extended the PriceDataSource also had the logic to process the data.

This isn't the case anymore! Let me show you what I mean:

# Concrete implementation for CSV access - only responsible for file operations

class CSVStore(DataStore):

def __init__(self, base_path):

self.base_path = base_path

def fetch_raw_data(self, symbol, frequency):

file_path = f"{self.base_path}/{symbol}_{frequency}.csv"

return pd.read_csv(file_path)The CSVStore now extends the DataStore class instead of the PriceReader class. It does know nothing about any processing logic. It only knows how to retrieve and return raw data.

To process data, we're going to create an instance of this CSVStore class and then pass it into the PriceReader as datasource instead.

csv_store = CSVStore("./")

csv_reader = PriceReader(csv_store, 'timestamp', 'price')

price_series = csv_reader.fetch_price_series("BTC", "1D")We then call the fetch_price_series() function on our PriceReader instance, which delegates that call to whatever Store class we injected while creating it.

class PriceReader:

def __init__(self, store):

# Dependency injection of data store

self.store = store

def fetch_price_series(self, symbol, frequency):

# Delegates data fetching to injected store

raw_df = self.store.fetch_raw_data(symbol, frequency)

return self.prepare_price_series(raw_df, frequency)The PriceReader then receives the data from its store and processes and returns it for further computation. The processing method still takes care of transforming the column names, indexing and sorting the data, checking fo NaNs, etc. as before.

Composition Solution Overview

Our new solution looks like this:

Each store now handles only its data accessing logic. They possess no knowledge of any processing. We can isolate them seamlessly which will make testing in the future much easier. New data sources don't require processing changes, which models a better seperation of concerns.

The PriceReader now processess data independently of source. We simply inject the data store into it which makes it more in line with the Single Responsibility Pattern (SRP) overall. Its main responsibility is to process data!

The Readers depend on the interface of DataStore instead of its implementation. Changes to processing don't affect stores and vice versa. This technique is called Dependency Inversion Principle (DIP). It enables us to swap implementations of fetch_raw_price_series without changing PriceReader at all. We can easily add new data sources without modifying existing code, which is what the Open/Closed Principle is all about.

As you can see, when we're staying true to the SOLID principles, everything kind of falls in to place. Focusing on loose coupling during the process makes the code more maintainable, testable and also more flexible by reducing the dependencies between components.

Funnily enough, due to this we god rid of some little nagging quirks in our code. For example the CSVReader had this weird super().__init() call before, where we had to manually call the parent PriceDataSource classes constructor to pass through the index_column and price_column names. This is gone now because it doesn't care about it anymore. That's the PriceReaders job now.

# Before

class CSVReader(PriceDataSource):

def __init__(self, base_path, index_column, price_column):

super().__init__(index_column, price_column)

self.base_path = base_path

# After

class CSVStore(DataStore):

def __init__(self, base_path):

self.base_path = base_pathThe full code can be found here.

Next Up

Last week we covered a lot of general refactoring and testing ground. This week we did the same with object-oriented programming by scratching its surface a little more. We're going to use all of this in the upcoming articles to finish restructuring our backtesters code and then finally move on to more trading related content again.

So long, happy coding!

- Hōrōshi バガボンド

Disclaimer: The content and information provided by Vagabond Research, including all other materials, are for educational and informational purposes only and should not be considered financial advice or a recommendation to buy or sell any type of security or investment. Vagabond Research and its members are not currently regulated or authorised by the FCA, SEC, CFTC, or any other regulatory body to give investment advide. Always conduct your own research and consult with a licensed financial professional before making investment decisions. Trading and investing can involve significant risk, and you should understand these risks before making any financial decisions. Backtested and actual historic results are no guarantee of future performance. Use of the material presented is entirely at your own risk.