Turning Trading Rule Signals Into Forecasts

Table Of Contents

Recap

Sanitizing Our Database

Turning EMAC Into A Forecast

A Very Simple Forecast

One Forecast To Rule Them All

Volatility Normalizing The Forecast

Forecast Absolute Average Values

Scaling The Forecasts Absolute Average

Updating Our C.O.R.E Recipe

Summary

Recap

Last week we coded our first trading rule, the Exponential Moving Average Crossover (EMAC for short), using the Python library pandas, and talked about some basic data cleaning operations. This week we're taking a closer look at the EMAC and at how we can use it to predict the future price movements we want to trade.

Sanitizing Our Database

Let's first quickly talk about some database sanitization. While fetching historical prices for BTC from our datahub, we found multiple coins that used the symbol BTC. This is obviously nonsense, since each ticker should be unique. Let's check whether there are more of these in our datahub:

```sql
SELECT COUNT(*)
FROM coins
WHERE symbol IN (
    SELECT symbol
    FROM coins
    GROUP BY symbol
    HAVING COUNT(*) > 1
);
```

It looks like there are a lot of them: 3,075 coins share their symbol with at least one other coin.
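If you'd rather inspect the duplicates from Python, here's a minimal pandas sketch of the same logic on a made-up mini `coins` table. In practice you'd load the real table first (for example with `pd.read_sql`); the rows below are purely illustrative.

```python
import pandas as pd

# Hypothetical stand-in for the `coins` table; the real data lives in Postgres
coins = pd.DataFrame({
    "id": [1, 2, 3, 4, 5, 6],
    "symbol": ["BTC", "BTC", "ETH", "LOKA", "BTC", "ETH"],
})

# keep=False flags *every* row whose symbol appears more than once,
# mirroring WHERE symbol IN (... HAVING COUNT(*) > 1)
dupes = coins[coins.duplicated(subset="symbol", keep=False)]

print(len(dupes))                      # 5, what the SQL COUNT(*) would return here
print(dupes["symbol"].value_counts())  # BTC 3, ETH 2
```

The `value_counts()` breakdown is handy for spotting which symbols are the worst offenders before deciding how to handle them.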

What should we do about them?

There are a few ways to approach this problem:

1. Ignore it, do nothing at all
2. Delete all of them
3. Migrate all of them into a temporary table for later inspection
4. Analyze them, find out their "real" symbols and update them in our db
5. Keep one of them as the original and create random symbols for the duplicates

Since we want to focus on developing our trading rules right now, I'd opt for option 1: ignoring them. Just for now! We've already noted that we need to handle them, and we fixed the BTC ones on the fly last week. IMO we don't face any further immediate problem!

This doesn't mean we're going to ignore them forever, though. At some point we'll probably need to do something about them, but right now they aren't a priority. Fixing all of them doesn't help with what we're currently doing. They also might not be a real problem at all in the future: if we adopt a top20/top50 market-cap style portfolio, we won't be pulling data for most of these 3,000+ entries anyway.

Depending on which exchanges we end up trading on, we can probably exclude a lot of them. There's no sense in researching tickers you can't actually trade! They also don't noticeably slow down our data fetching, because we're using a B-tree index. After all, this is just a research lab.

If we run into more problems with duplicated symbols in the future, we're definitely going to fix them on the spot and maybe even come up with a generic solution.

We could take a tiered approach: migrate them to another table, selectively analyze and fix them whenever we need them, or even source an entirely new set of historical prices from a different provider. The "best" solution depends on the strategy and infrastructure we end up with. Right now we're still in a very early development phase of our system, so keeping this in the back of our minds is enough.

It's easy to go down all sorts of rabbit holes while developing, and it's almost always a bad idea to chase them if they aren't directly related to the immediate task!

So let's get back to the task at hand:

Turning EMAC Into A Forecast

Trading rules are all about forecasting. Betting our money on markets doesn't make any sense if we can't predict where the price is likely headed. Right now, we're only using the raw EMAC value to gauge whether the current state of the market leans more towards an up or a down move. This is a forecast! We're predicting what's likely to happen in the future.

This forecast, however, is rather meaningless in its current form. It only tells us which direction the market will probably go, not how likely that move is or how much we should bet on it. If we circle back to our generic EV discussion, it's clear we're still missing the pieces we need to manage our risk and reduce our risk of ruin.

Forecasting is all about analyzing raw information and processing it into alphas, the source of our excess returns.

It's important to understand that these concepts won't translate 1:1 from our EV article. We won't be able to pinpoint a precise probability of winning or losing, but what we're going to do is closely related.

A Very Simple Forecast

If we have a look at the EMAC values again, we can see that they aren't binary. They aren't simply positive or negative! Sure, you can look at them that way, but there's more information in there.

import pandas as pd

import psycopg2

from dotenv import load_dotenv

import os

import matplotlib.ticker as ticker

import matplotlib.pyplot as plt

load_dotenv()

conn = psycopg2.connect(

dbname=os.environ.get("DB_DB"),

user=os.environ.get("DB_USER"),

password=os.environ.get("DB_PW"),

host=os.environ.get("DB_HOST"),

port=os.environ.get("DB_PORT")

)

symbolname = 'BTC'

cur = conn.cursor()

cur.execute(f"""

SELECT ohlcv.time_close, ohlcv.close

FROM ohlcv

JOIN coins ON ohlcv.coin_id = coins.id

WHERE coins.symbol = '{symbolname}'

ORDER BY ohlcv.time_close ASC;

""")

rows = cur.fetchall()

cur.close()

conn.close()

df = pd.DataFrame(rows, columns=['time_close', 'close'])

df['time_close'] = pd.to_datetime(df['time_close']).dt.strftime('%Y-%m-%d')

df.set_index('time_close', inplace=True)

fast_lookback = 8

slow_lookback = fast_lookback * 4

df['ema_fast'] = df['close'].ewm(span=fast_lookback).mean()

df['ema_slow'] = df['close'].ewm(span=slow_lookback).mean()

df['raw_forecast'] = df['ema_fast'] - df['ema_slow']

# time_close close ema_fast ema_slow ema_crossover

# 5257 2024-12-04 98768.527555 96596.377356 89442.172271 7154.205086

# 5258 2024-12-05 96593.572272 96595.754004 89875.590453 6720.163552

# 5259 2024-12-06 99920.714730 97334.634166 90484.385863 6850.248302

# 5260 2024-12-07 99923.336619 97909.901378 91056.443485 6853.457893

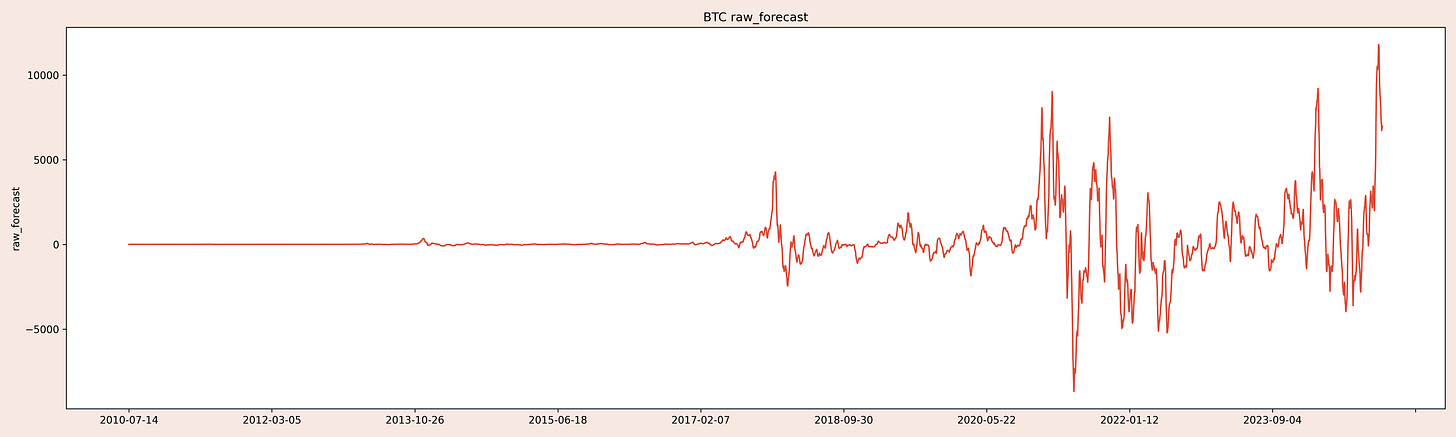

# 5261 2024-12-08 101236.013140 98649.037325 91673.387100 6975.650224All of the EMACs positive values range from 0 to ∞. The same is true for negative values, with -∞ to 0. We can use this information to improve our forecast. Stronger forecasts should lead to bigger positions because we expect bigger returns. Whenever new data comes in, we should recalculate the forecast and adjust our position accordingly.
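The snippet above needs our Postgres datahub. To experiment without it, here's a self-contained sketch of the same raw EMAC (EMA8 minus EMA32) on a synthetic random-walk price path. The prices are made up, so only the shape of the output matters: the sign alone collapses everything into "up" or "down", while the raw value keeps the strength of the signal.

```python
import numpy as np
import pandas as pd

# Synthetic daily closes: a geometric random walk (made-up data, not BTC)
rng = np.random.default_rng(0)
dates = pd.date_range("2022-01-01", periods=400, freq="D")
close = pd.Series(100 * np.exp(np.cumsum(rng.normal(0.0, 0.03, len(dates)))), index=dates)

fast_lookback = 8
slow_lookback = fast_lookback * 4

# Raw EMAC forecast, same formula as the snippet above
raw_forecast = close.ewm(span=fast_lookback).mean() - close.ewm(span=slow_lookback).mean()

# Binary view: only the direction survives (+1, -1, or 0 on the very first day)
direction = np.sign(raw_forecast)

print(direction.value_counts())       # how many days point up vs down
print(raw_forecast.abs().describe())  # a whole distribution of signal strengths
```

Both EMAs start at the first close, so the very first raw forecast is exactly 0; after that the values spread out continuously.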

One Forecast To Rule Them All

The EMAC above is our raw forecast; we haven't processed it in any way yet. This means its values look very different depending on the instrument we're trading:

# Raw EMAC Forecast for BTC

# close ema_fast ema_slow ema_raw

# time_close

#2024-12-04 98768.527555 81490.252534 66473.906652 15016.345882

# 2024-12-05 96593.572272 81954.970064 66708.300937 15246.669128

# 2024-12-06 99920.714730 82507.762208 66966.763301 15540.998907

# 2024-12-07 99923.336619 83043.626036 67223.234689 15820.391347

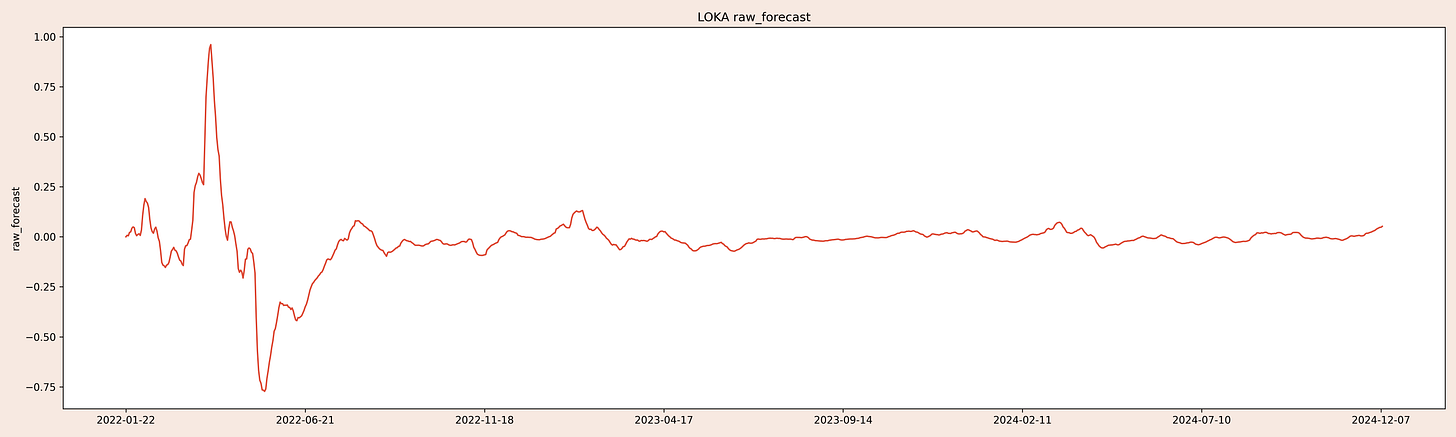

# 2024-12-08 101236.013140 83603.391793 67487.925571 16115.466222# Raw EMAC Forecast for LOKA

# close ema_fast ema_slow ema_raw

# time_close

# 2024-12-04 0.297104 0.213040 0.224075 -0.011035

# 2024-12-05 0.301703 0.215768 0.224679 -0.008911

# 2024-12-06 0.311765 0.218722 0.225357 -0.006635

# 2024-12-07 0.303318 0.221325 0.225964 -0.004639

# 2024-12-08 0.334855 0.224818 0.226811 -0.001994The raw forecast values of BTC and LOKA differ significantly. If we were to translate them into position sizings, we'd need to wrangle with them independently. A good forecast should mean the same in every context! We need to scale our forecasts so they are consistent across instruments.
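Why do the numbers differ so much? The EMAC is a difference of two price averages, so it's measured in price units and scales linearly with the price level. A quick sketch on a synthetic random walk (made-up data, not real BTC or LOKA prices) shows that the same price path at two different levels yields forecasts that differ by the price ratio:

```python
import numpy as np
import pandas as pd

def emac(close: pd.Series, fast: int = 8, slow: int = 32) -> pd.Series:
    """Raw EMA crossover: fast EMA minus slow EMA (same formula as raw_forecast above)."""
    return close.ewm(span=fast).mean() - close.ewm(span=slow).mean()

# One synthetic random-walk path (made-up data), placed at two price levels
rng = np.random.default_rng(42)
base = pd.Series(np.exp(np.cumsum(rng.normal(0.0, 0.02, 500))))
expensive = base * 100_000  # "BTC-like" price level
cheap = base * 0.3          # "LOKA-like" price level

fc_expensive = emac(expensive)
fc_cheap = emac(cheap)

# Identical shape, wildly different magnitude: the ratio is just the price ratio
print(fc_expensive.abs().mean(), fc_cheap.abs().mean())
print(np.allclose(fc_expensive, fc_cheap * (100_000 / 0.3)))  # True
```

So a raw EMAC of 15,000 and one of -0.01 can describe equally strong trends; the number itself says little until we remove the price scale.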

Rob Carver has a nice article on this topic. By finding and using a scaling factor, we can bring all forecasts to the same scale.

By the way, you've probably noticed me reference Carver a few times now. This is no coincidence! Carver is probably the single best resource out there for anyone looking to get started with systematic trading. The wisdom scattered across his books and blog posts is insanely helpful and precise. If I could choose only one author to focus on when getting started, I'd choose Carver every time!

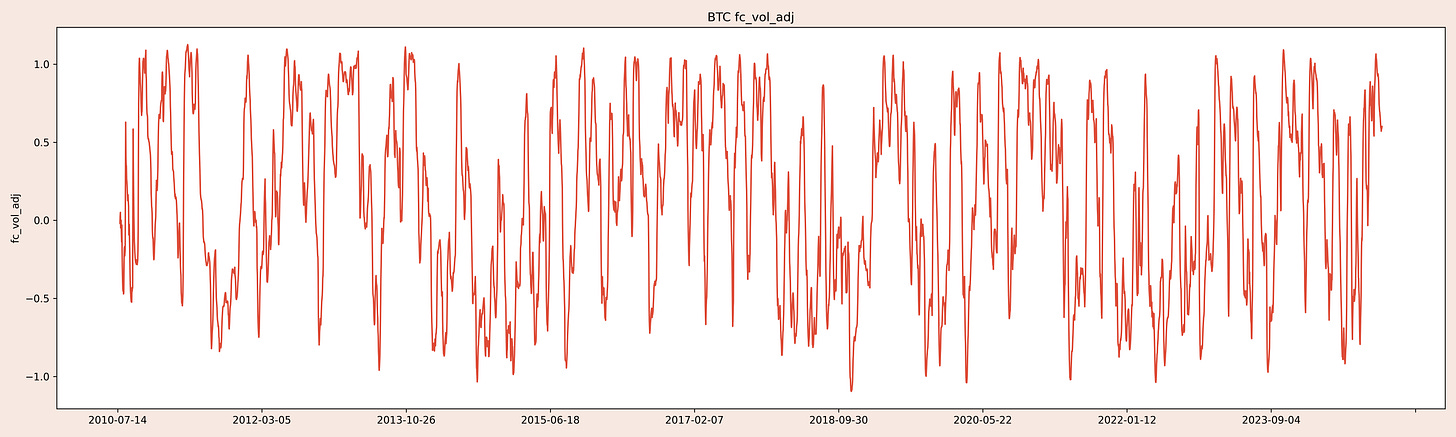

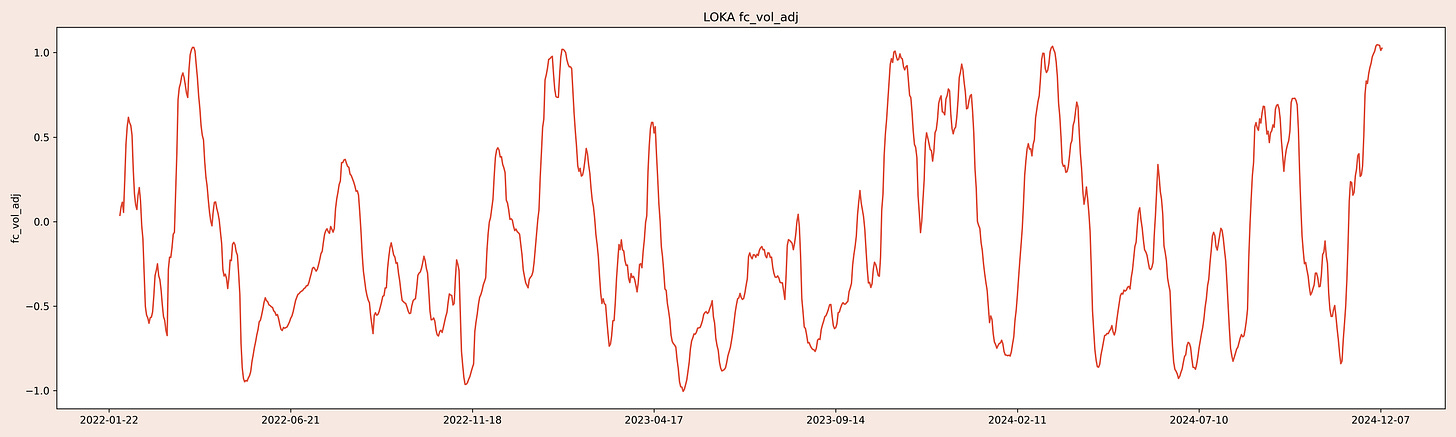

Volatility Normalizing The Forecast

First things first: to make the forecast more consistent across instruments, we can adjust it by each instrument's volatility. There are lots of different techniques you could use (std_dev, ATR, etc.) and, as always, the choice is up to you. We like to keep the approach consistent and will continue to use the good ol' standard deviation of the instrument's returns to adjust for volatility. We're also going to smooth it out a little by using an exponentially weighted standard deviation, which gives more weight to recent data, just like the EMAs in the forecast itself.

```python
# [...]

# Exponentially weighted standard deviation over roughly 35 days.
# Note: as written, this measures the spread of closing *prices*, not of returns.
df['price_vol'] = df['close'].ewm(span=35, min_periods=10).std()

# Vol-adjusted forecast: raw EMAC divided by the instrument's volatility
df['fc_vol_adj'] = df['raw_forecast'] / df['price_vol']

# Forecasts for BTC
#                     close       raw_fc  fc_vol_adj
# time_close
# 2024-12-04   98768.527555  7154.205086    0.598169
# 2024-12-05   96593.572272  6720.163552    0.570758
# 2024-12-06   99920.714730  6850.248302    0.584361
# 2024-12-07   99923.336619  6853.457893    0.588590
# 2024-12-08  101236.013140  6975.650224    0.601047

# Forecasts for LOKA
#                close    raw_fc  fc_vol_adj
# time_close
# 2024-12-04  0.297104  0.041228    1.047331
# 2024-12-05  0.301703  0.044083    1.044628
# 2024-12-06  0.311765  0.047201    1.042743
# 2024-12-07  0.303318  0.047478    1.013281
# 2024-12-08  0.334855  0.052138    1.025923
```

This looks better already: both instruments' adjusted forecasts seem to oscillate roughly between -1 and 1.

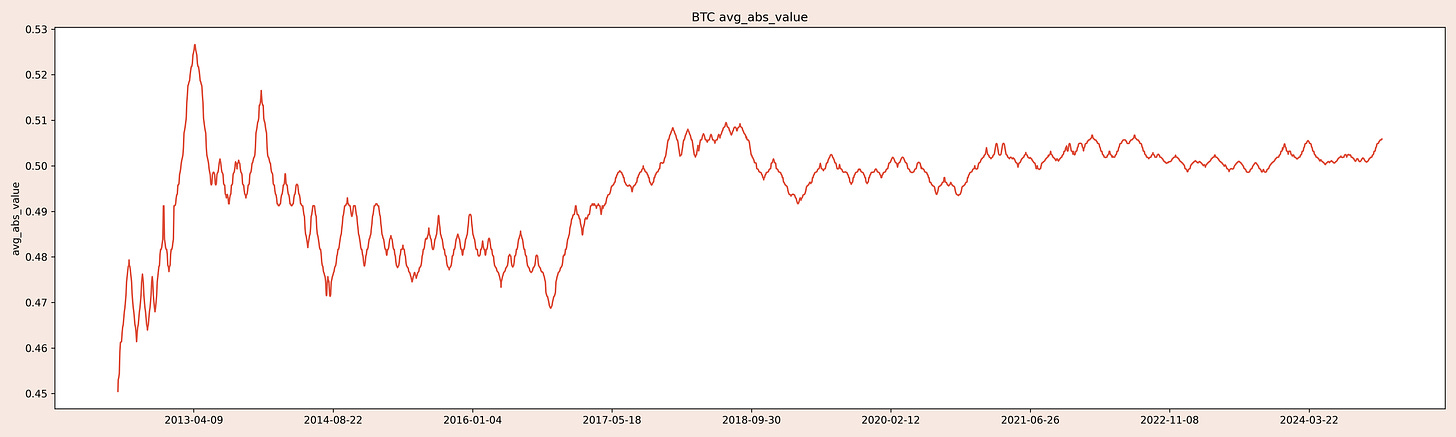
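The prose above talks about the volatility of returns, while the `price_vol` line takes the standard deviation of closing prices. Both happen to cancel out the price level, but they measure different things. Here's a minimal sketch of the returns-based variant, assuming volatility of daily price *changes* in price units (our assumption, in the spirit of Carver's EWMAC; `vol_adjusted_emac` is a hypothetical helper). Since both the raw EMAC and this volatility scale with the price level, the vol-adjusted forecast comes out the same for a "BTC-like" and a "LOKA-like" copy of one synthetic price path:

```python
import numpy as np
import pandas as pd

def vol_adjusted_emac(close: pd.Series, fast: int = 8, vol_span: int = 35) -> pd.Series:
    """Hypothetical helper: raw EMAC divided by the EW std of daily price changes."""
    slow = fast * 4
    raw = close.ewm(span=fast).mean() - close.ewm(span=slow).mean()
    # Volatility of day-to-day price changes (in price units), not of price levels
    price_vol = close.diff().ewm(span=vol_span, min_periods=10).std()
    return raw / price_vol

# Synthetic random-walk prices (made-up data) at two very different price levels
rng = np.random.default_rng(7)
base = pd.Series(np.exp(np.cumsum(rng.normal(0.0, 0.02, 500))))

btc_like = vol_adjusted_emac(base * 100_000)
loka_like = vol_adjusted_emac(base * 0.3)

# The price level cancels out: both series are (numerically) identical
print(np.allclose(btc_like.dropna(), loka_like.dropna()))  # True
```

Expect the magnitudes to differ from the outputs above: dividing by the spread of daily changes rather than of price levels yields a different typical forecast size, which the scaling step below adapts to either way.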

Forecast Absolute Average Values

From Carver's article:

The basic idea is that we're going to take the natural, raw, forecast from a trading rule variation and look at it's absolute value. We're then going to take an average of that value. Finally we work out the scalar that would give us the desired average (usually 10).

If we can get the average absolute forecast of each instrument to around 10, we'd be in a much more normalized world where everything means about the same again. Each time the forecast reads 10 (or -10 for shorts), we'd open an average-sized position; each time it reads 20 or -20, our position would be double our average size. How do we do this? Simple! We just need to divide 10 by the instrument's average absolute value:

```python
# [...]

print(symbolname)

# Average absolute vol-adjusted forecast over the whole history
abs_values = df['fc_vol_adj'].abs()
avg_abs_value = abs_values.mean()
print(avg_abs_value)

# BTC
# 0.5123830047105346
```

This is only a single value, though. We want a time series so we can use it to scale our forecasts over time in our backtest. Carver suggests using the median instead of the mean to make the average more resilient against big outliers. He also suggests using an expanding window instead of a rolling one, with a minimum of 1 to 2 years of daily data, to keep the forecast consistent over time: 10 should mean the same thing in 2020 as it did in 2010.
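A tiny made-up example shows why the median is the more robust choice, and what an expanding window does. `min_periods=3` stands in for the 720 days used in the code that follows, purely to keep the toy small:

```python
import pandas as pd

# Made-up absolute forecasts: mostly around 0.5, plus one extreme outlier
abs_fc = pd.Series([0.4, 0.5, 0.6, 0.5, 0.45, 0.55, 25.0])

print(abs_fc.mean())    # pulled up to roughly 4 by the single outlier
print(abs_fc.median())  # 0.5, the outlier barely matters

# Expanding window: each value uses all data up to that point, never anything after it.
# The first min_periods - 1 values are NaN because there isn't enough history yet.
expanding_median = abs_fc.expanding(min_periods=3).median()
print(expanding_median.tolist())  # [nan, nan, 0.5, 0.5, 0.5, 0.5, 0.5]

# Scaling factor that would bring the average absolute forecast to 10
print((10.0 / expanding_median).tolist())
```

With the mean, that single outlier would shrink the scaling factor from 20 to about 2.5; with the median it stays put.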

[...]

print(symbolname)

abs_values = df['fc_vol_adj'].abs().expanding(min_periods=720)

df['avg_abs_value'] = abs_values.median()

print('avg_abs_val', df['avg_abs_value'].tail(5))

# BTC

# time_close

# 2024-12-04 0.505684

# 2024-12-05 0.505691

# 2024-12-06 0.505697

# 2024-12-07 0.505786

# 2024-12-08 0.505874

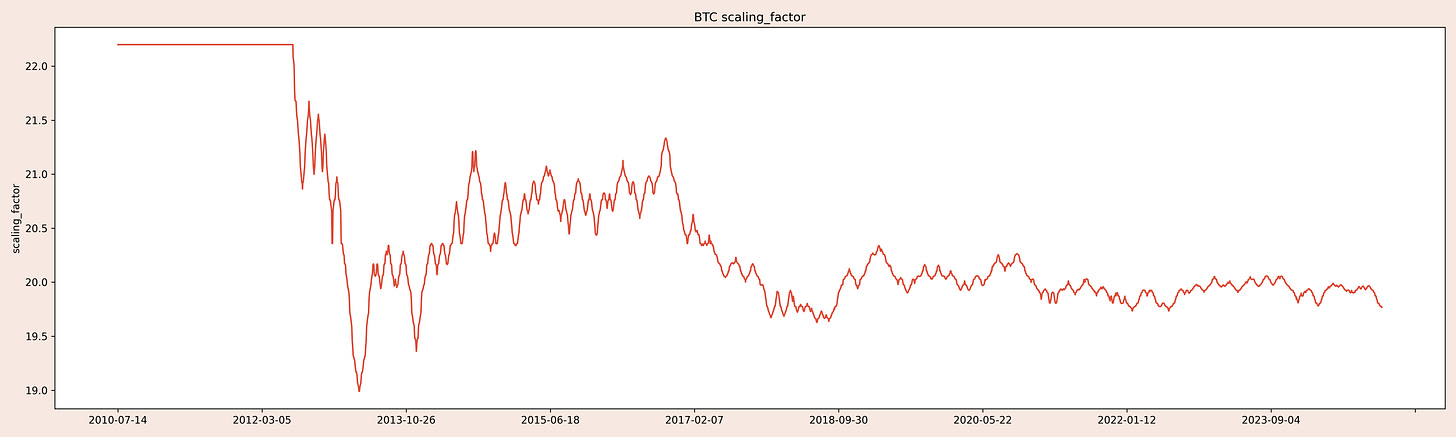

# Name: fc_vol_adj, dtype: float64Scaling The Forecasts Absolute Average

Now that we have the forecast's absolute average (median) as a time series, we can divide 10 by each of its values to get the required scaling factor, and backfill the NaN periods at the beginning, which exist because the expanding 2-year window doesn't have enough data points yet.

```python
# [...]

target_avg = 10.0

# Scalar that maps the average absolute forecast to 10
df['scaling_factor'] = target_avg / df['avg_abs_value']
# Fill the warm-up NaNs with the first available scalar.
# Caveat: that scalar is computed from later data, i.e. a bit of lookahead in a backtest.
df['scaling_factor'] = df['scaling_factor'].bfill()

print(df[['close', 'raw_forecast', 'fc_vol_adj', 'scaling_factor']].tail(5))

# BTC
#                     close       raw_fc  fc_vol_adj  scaling_factor
# time_close
# 2024-12-04   98768.527555  7154.205086    0.598169       19.775179
# 2024-12-05   96593.572272  6720.163552    0.570758       19.774925
# 2024-12-06   99920.714730  6850.248302    0.584361       19.774671
# 2024-12-07   99923.336619  6853.457893    0.588590       19.771218
# 2024-12-08  101236.013140  6975.650224    0.601047       19.767767
```

To scale our forecasts to an average absolute value of 10, all we have to do now is multiply them by their scaling factor. We're also going to cap them at -20 and 20 to shield ourselves from extreme readings, where mean reversion is much more likely. There's probably nothing worse for a trend-following strategy than entering a 4x average position because of an extreme forecast reading at "the end" of a trend, just before it reverses.
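To make the convention from above concrete (a forecast of 10 means an average position, 20 means double), here's a minimal sizing sketch. `position_from_forecast` and `average_position` are hypothetical; how big an "average position" should be depends on risk targeting, which we haven't covered yet.

```python
import pandas as pd

def position_from_forecast(forecast: pd.Series, average_position: float,
                           cap: float = 20.0, average_forecast: float = 10.0) -> pd.Series:
    """Hypothetical sizing: cap the forecast at +/-cap, then scale the average position by forecast / 10."""
    capped = forecast.clip(lower=-cap, upper=cap)
    return average_position * capped / average_forecast

# Made-up scaled forecasts, including one extreme reading of 45
fc = pd.Series([10.0, -10.0, 20.0, 5.0, 45.0])
sizes = position_from_forecast(fc, average_position=2.0)
print(sizes.tolist())  # [2.0, -2.0, 4.0, 1.0, 4.0]
```

Without the cap, the reading of 45 would have produced a position 4.5x the average size instead of 2x.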

```python
# [...]

# Scale to an average absolute value of 10, then cap extreme readings at +/-20
df['scaled_forecast'] = df['fc_vol_adj'] * df['scaling_factor']
df['capped_forecast'] = df['scaled_forecast'].clip(lower=-20, upper=20)

print(df[['close', 'raw_forecast', 'fc_vol_adj', 'scaling_factor', 'capped_forecast']].tail(5))

# BTC
#                     close  raw_forecast  fc_vol_adj  scaling_factor  capped_forecast
# time_close
# 2024-12-04   98768.527555   7154.205086    0.598169       19.775179        11.828902
# 2024-12-05   96593.572272   6720.163552    0.570758       19.774925        11.286687
# 2024-12-06   99920.714730   6850.248302    0.584361       19.774671        11.555543
# 2024-12-07   99923.336619   6853.457893    0.588590       19.771218        11.637141
# 2024-12-08  101236.013140   6975.650224    0.601047       19.767767        11.881364
```

If we now compare the average absolute forecast across instruments, they all look pretty much the same:

```python
# [...]

print(symbolname)
# Average absolute forecast after scaling and capping; should land near 10
print('avg_rescaled_abs_fc_capped', df['capped_forecast'].abs().mean())

# [...]

# BTC
# avg_rescaled_abs_fc_capped 10.391611812218311
# LOKA
# avg_rescaled_abs_fc_capped 10.442839702210362
# TRX
# avg_rescaled_abs_fc_capped 10.239200310811853
# ETH
# avg_rescaled_abs_fc_capped 8.925330666767259
```

The only outlier is ETH. We'll come back to that later in the series, as this article is already quite long. A little hint: it's not the real ETH (remember our BTC1-5 workaround), and it has far fewer data points than the others.

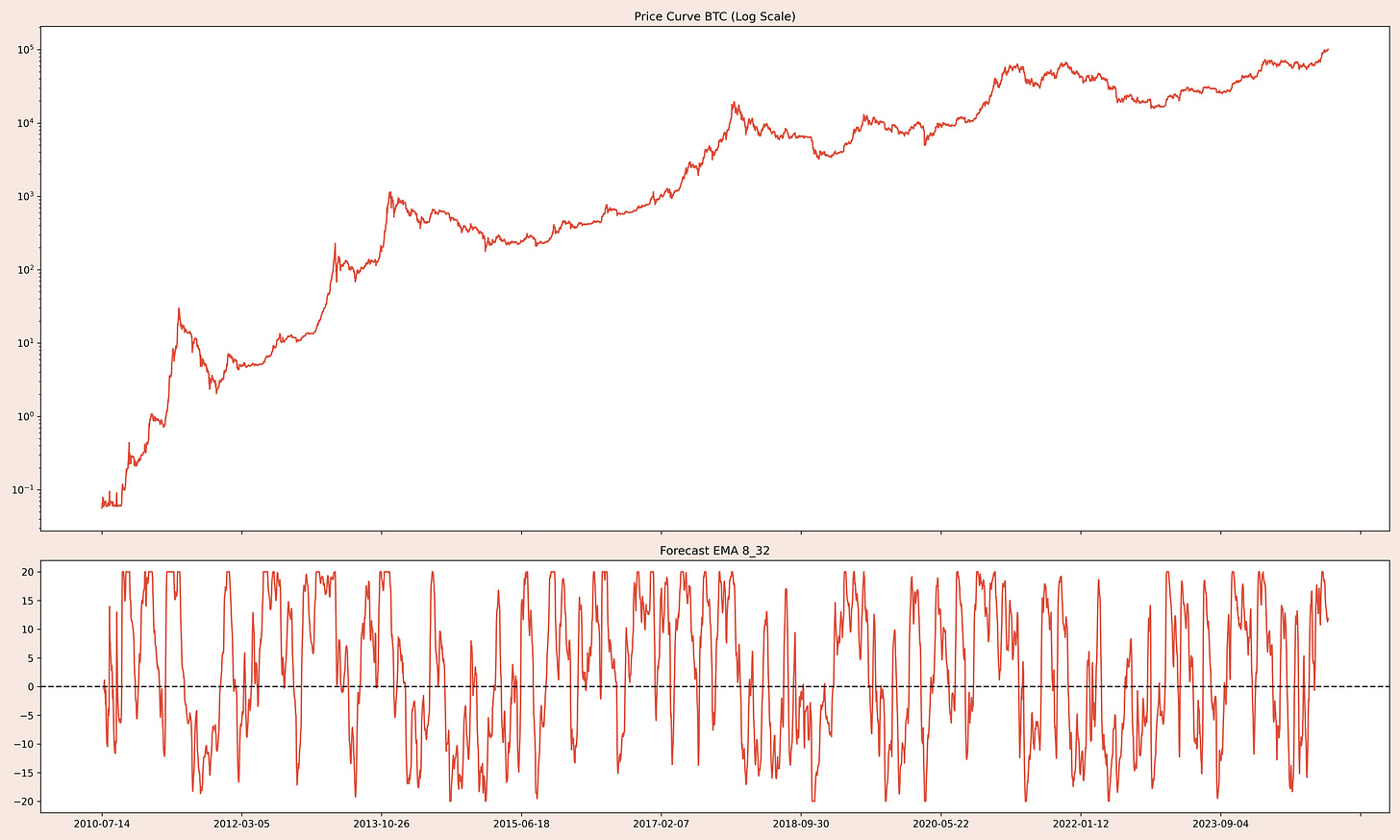
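To tie the steps together, here's the whole pipeline condensed into one function and run on a synthetic random walk at two very different price levels. The function name is hypothetical and the data is made up; it reuses the article's price-level volatility, the expanding median with `min_periods=720`, the backfilled scalar and the ±20 cap. Because every step is invariant to the price level, both copies end up with the same forecast:

```python
import numpy as np
import pandas as pd

def scaled_emac_forecast(close: pd.Series, fast: int = 8, vol_span: int = 35,
                         min_periods: int = 720, target_avg: float = 10.0,
                         cap: float = 20.0) -> pd.Series:
    """Hypothetical helper chaining the steps above: raw EMAC -> vol adjustment
    -> expanding-median scalar -> cap."""
    slow = fast * 4
    raw = close.ewm(span=fast).mean() - close.ewm(span=slow).mean()
    # Same volatility measure as the article's code: EW std of closing prices
    price_vol = close.ewm(span=vol_span, min_periods=10).std()
    fc_vol_adj = raw / price_vol
    # Expanding median of the absolute forecast, NaN until min_periods values exist
    avg_abs = fc_vol_adj.abs().expanding(min_periods=min_periods).median()
    # Scalar mapping the average absolute forecast to target_avg; warm-up backfilled
    scaling_factor = (target_avg / avg_abs).bfill()
    return (fc_vol_adj * scaling_factor).clip(lower=-cap, upper=cap)

# Synthetic random-walk prices (made-up data), roughly 5.5 years of daily closes
rng = np.random.default_rng(1)
base = pd.Series(np.exp(np.cumsum(rng.normal(0.0, 0.03, 2000))))

fc_expensive = scaled_emac_forecast(base * 100_000.0)
fc_cheap = scaled_emac_forecast(base * 0.3)

for name, fc in [("expensive", fc_expensive), ("cheap", fc_cheap)]:
    print(name, round(fc.abs().mean(), 2))
```

One thing to watch with real data: an instrument with fewer than `min_periods` valid values never gets a scalar, so `bfill` has nothing to fill from and the whole forecast stays NaN.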

Let's plot what we created here for BTC:

[...]

fig, (ax1, ax2) = plt.subplots(2, 1, sharex=True, figsize=(20, 12),

facecolor='#f7e9e1',

gridspec_kw={'height_ratios': [2, 1]})

ax1.plot(df['close'].index, df['close'], color='#de4d39', label=f'Price {symbolname}')

ax1.set_yscale('log')

ax1.set_title(f'Price Curve {symbolname} (Log Scale)')

ax2.plot(df['close'].index, df['capped_forecast'], color='#de4d39', label=f'Forecast EMA {fast_lookback}_{slow_lookback}')

ax2.axhline(y=0, color='#100d16', linestyle='--', label='Zero Line')

ax2.set_title(f'Forecast EMA {fast_lookback}_{slow_lookback}')

ax2.xaxis.set_major_locator(ticker.MaxNLocator(nbins=10))

plt.tight_layout()

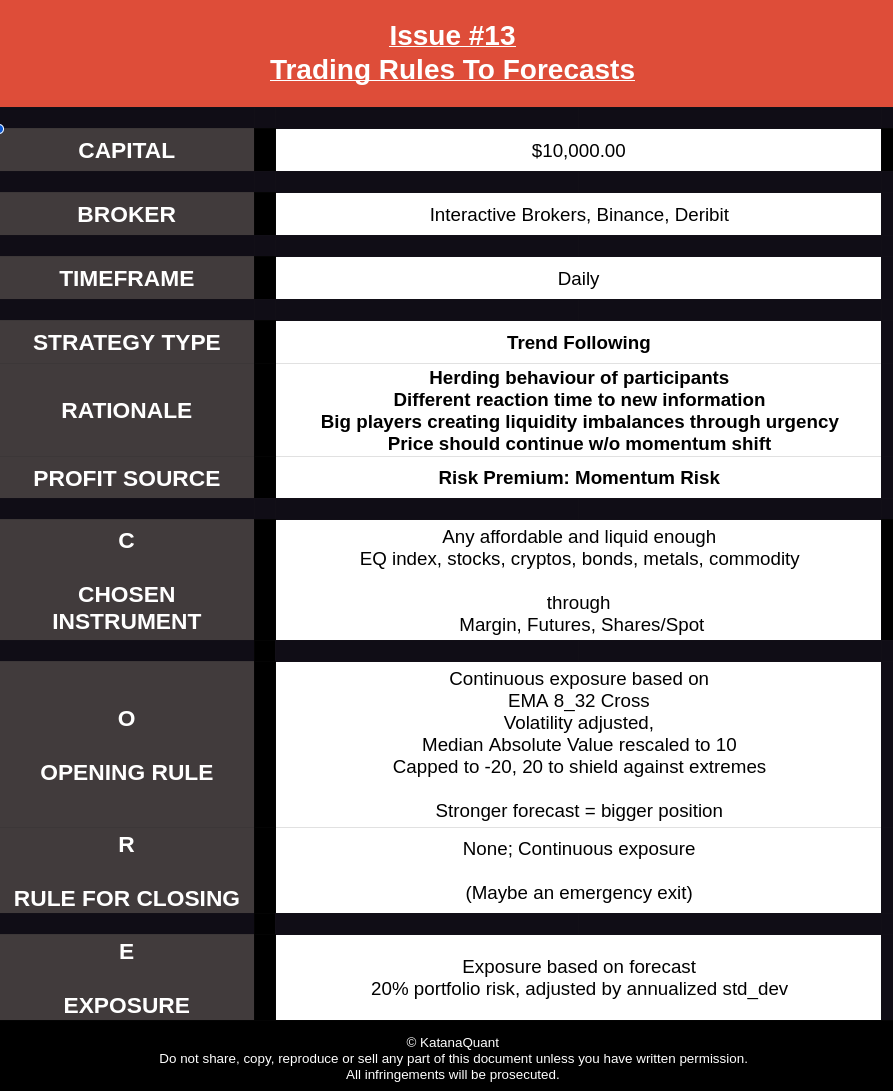

plt.savefig(f'{ſymbolname}_price_and_forecast.png', dpi=300)Updating Our C.O.R.E Recipe

We just made a whole lot of changes to our trading strategy so we should probably update our C.O.R.E. recipe accordingly:

Summary

Let's recap what we did here:

We calculated a raw forecast using the EMA8_32 crossover and then processed it further. We adjusted it for the instrument's volatility to normalize it.

Then we calculated the scalar needed to bring the forecast's absolute average (median) value to 10 throughout history.

Note that we haven't yet taken a cross-sectional median to normalize the forecasts across all instruments, as Carver suggests in his blog post. We're going to play around with that over the next few issues to see if it improves the scalar.

For now we're going to keep things real simple so we can move forward in the development process quickly. We're working our way towards a real backtest so we can finally start researching. How much complexity you want to drop or include during your cycles is up to you. Just know, you can always come back later and change things.

After scaling the forecasts to an average absolute value of 10, we capped them at -20 and 20 to shield us from extreme readings, which are more likely to occur at tops or bottoms.

This process helps us build generic forecasts that mean the same thing in every context. Generic values are much easier to work with systematically and help reduce complexity at almost every development stage.

This forecast is still not ready to be used for trading. You technically could, and a lot of people probably do it this way, but there's still room for a lot of improvements.

The full code of this article can be found in this week's GitHub repo.

- Hōrōshi バガボンド

Disclaimer: The content and information provided by Vagabond Research, including all other materials, are for educational and informational purposes only and should not be considered financial advice or a recommendation to buy or sell any type of security or investment. Always conduct your own research and consult with a licensed financial professional before making investment decisions. Trading and investing can involve significant risk, and you should understand these risks before making any financial decisions.