A Dockerized Crypto Data Hub - Part II

Why You Should Index Your Database

Table of Contents

Recap

Why An Index Is Important

The Most Common Index: B-tree

Index Leaf Nnodes

The B-tree

Installing dbeaver & connecting to your db

Searching without index

The EXPLAIN statement

Creating the index

Searching with index

Joining without index

The gist of it

Recap

Last issue we utilized Docker and docker-compose to create a research data base and then seeded it with free historical EOD price data for 10k crypto tokens.

If you didn't already, you can check out the article here.

This week we're going to take a look at working with databases as a developer, specifically how to create and use an index.

Why An Index Is Important

If you already did some work with databases you probably have heard something along the lines of "SQL is inherently slow". While this might have been true in the past, it's definitely not true anymore. Still, developers often face performance issues when working with SQL databases.

Why does this happen?

SQL is a programming language that separates what data is needed from how to get it. Let's take a look at a very simple example of fetching all the exchanges you can trade BTC on from our database.

SELECT exchanges FROM coins WHERE symbol = 'BTC'The above SQL query only specifies what data you're interested in: the value of the column named exchanges which is stored in BTCs row in the table coins. We search for the table by matching its column named symbol. What the query doesn't specify is how the database should retrieve this information!

On the one hand this abstraction is good for developers because we don't have to think all that much about how to pull the data from our data store. We just need to tell it what data we want.

The query planner of the database will itself decide how to access that data by simulating different access techniques, scoring them and then executing the best performing one. If we dont set up our database in a way we can pull data efficiently, the only thing the optimizer can really do is going through all rows sequentially, one by one, until it finds what we need.

Right now we "only" have about 8 million rows of historical EOD spot crypto price data including volume and market cap. "Only" in hyphens because even though 8 million rows are basically nothing for a modern database, you will see that how exactly we're searching and accessing its data already makes quite the difference. Things will only get worse over time as we gradually add more data - new EOD crypto prices, other timeframes, different asset classes to diversify better, etc.

Since we're building this datahub for research, we want to be able to fetch data from it (and also execute all other steps) as fast as possible so we can iterate quickly.

This is where an index comes in! It works just like a phonebook. You only have to skim a few pages instead of the whole book for the data you're looking for after specifying an entry point like the first letter of someones lastname.

Let's take a closer look at how an index works.

The Most Common Index: B-tree

The B-tree (not binary tree!) is the most common SQL index and also happens to be the default in most databases to quickly access data. It supports equality and range querying and also works with sorting.

Since it's the default index in PostgreSQL we can create a B-tree index using

CREATE INDEX idx_coins_symbol on coins(symbol);The query planner will consider using the B-tree index whenever an indexed column is used for a comparison or any of these operators: BETWEEN, IN, IS NULL, IS NOT NULL and pattern matching anchored to the beginning col LIKE 'foo%' but not col LIKE '%bar'.

Creating an index doesn't change the tables data. It creates a new data structure that acts as a pointer and refers to the table.

Searching in a database index is like searching an ordered phonebook. Finding data in an ordered data set is faster because the sort order determines each entrys position.

Index Leaf Nodes

One of the drawbacks of using an index is that changing data via INSERT, DELETE, UPDATE becomes slower because it needs to update the index order immediately to prevent moving large amounts of data.

For this reason the database combines two different data structures to make accessing data faster while also keeping write operations sane: a doubly linked list and a search tree (the B-tree).

If you think of the B-tree as a literal tree, the doubly linked lists are the leafs hanging from its branches. They serve the purpose of establishing a logical order independent from the physical order in memory. The leafs keep a reference of their physical location, matching it to their logical order within this list.

Each entry knows about the previous and next logical entry. If we would only keep track of the physical order, each write operation would need to move large amounts of data to keep the phyiscal order updated, which is very time consuming.

By inserting new leaf nodes between two existing nodes, updating their logical links, we don't need to touch the physical order at all. We just need to move some pointers.

The B-tree

Since the leaf nodes are stored in an arbitrary order - like shuffling the pages of your phonebook - databases put a second structure on top to quickly find the entries again: a balanced search tree with root and branch nodes.

A branch layer is built up until all the leaf nodes are covered where each branch node stores the biggest values of their respective leaf nodes. On top of that is another layer, that stores the biggest values of their underlying branch nodes. This repeats until all biggest values fit into a single node, the root node.

If we search for the key 57, we traverse the tree in ascending order until a value is greater than or equal to 57. Since the tree is balanced - which just means the distance between each root and leaf node is the same everywhere - this search works almost instantly even on huge data sets.

Let's see it in action.

Installing dbeaver & connecting to our db

We're going to use dbeaver to fiddle around with our database for now. Ultimately we should've created the index in our init.sql file while seeding it. After we're done with taking a closer look at our index in action, we're going to add it to the file so it will get created automatically when we redeploy our datahub utilizing docker.

After installing dbeaver, we need to create a new connection for our database, specifically a PostgreSQL connection (elephant).

Select the elephant and click Next. If you're prompted to download the PostgreSQL driver, select Yes and continue. In the next menu you need to fill out some details for the connection. Fill the missing fields with the contents of your .env file so it matches our example and hit OK.

DB_USER=postgres

DB_PW=password

DB_DB=postgres

DB_PORT=5432You should now be able to connect to the database with dbeaver after starting the datahub with docker-compose up.

Searching Without Index

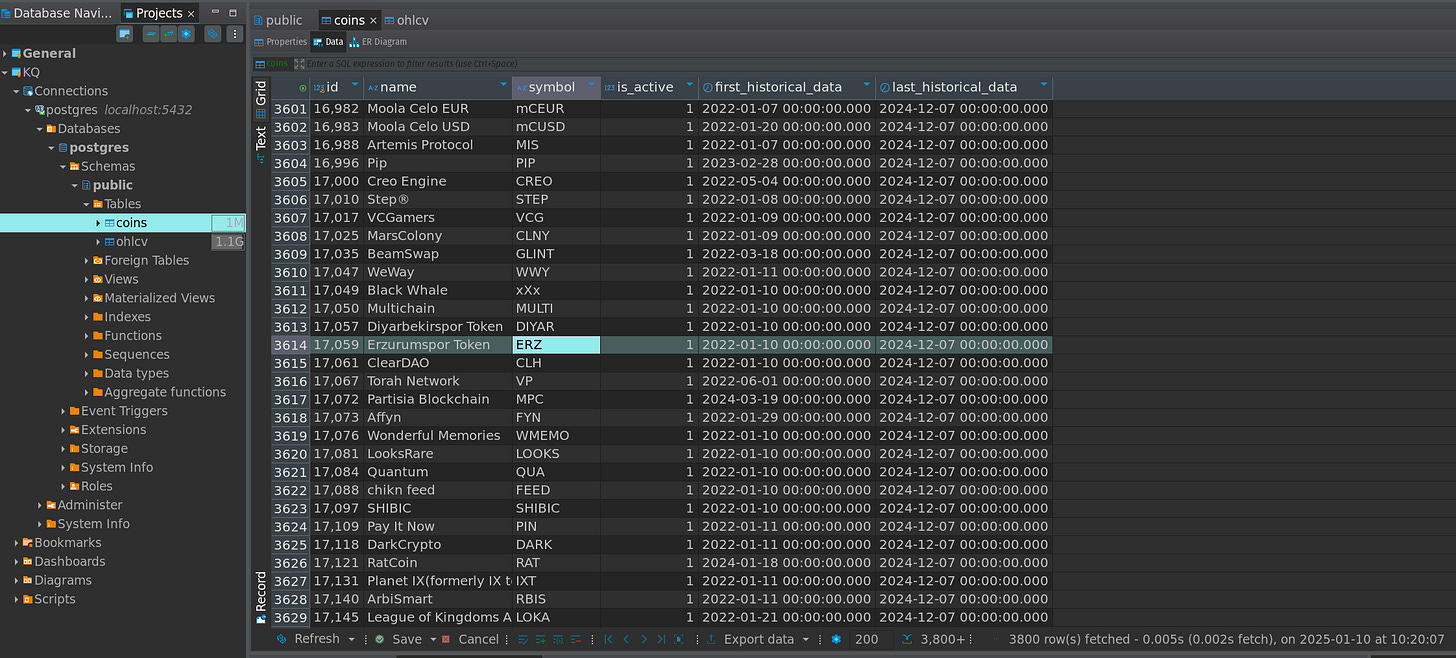

Let's pull down the information for a randomly selected coin: LOKA. To do this we need to make use of the WHERE clause. Navigate to the coins table by clicking through the connection: postgres -> Databases -> postgres -> Schemas -> public -> Tables -> coins.

CTRL + Click into the green tablename top left to open the SQL editor

and copy-paste the following SQL query in there before hitting the green play button.

SELECT * FROM coins where symbol ='LOKA';Sure enough we get some data:

The EXPLAIN Statement

Before the database executes any SQL query, its optimizer creates an execution plan for it. Its exact inner workings are not so important right now. It does so by calculating fictive cost amounts for different types of scans for the task at hand, and then comparing them to find the cheapest one.

PostgreSQL (>=9.2) has 4 types of index access mechanics:

The SEQ SCAN: Scans the entire table as it is stored on the disk step by step until it finds the correct entry.The INDEX SCAN: Performs a B-tree traversal, walking through the leaf nodes to find all matching entries, and fetching the data.The INDEX ONLY SCAN: Also performs a B-tree traversal but skips accessing the table because it can find selected columns in the index itself.The BITMAP INDEX / HEAP SCAN: Works like anINDEX SCANbut instead of fetching only one tuple-pointer fetches all tuple-pointers, sorts them via in-memory bitmap, and then visits the table tuples in physical order.

If there is no index present, the optimizer often has no choice but using a SEQ SCAN and scan the entire table to find the location of the requested data. If possible, we want to never use a SEQ SCAN.

To see which scan is being used for our SQL query, we can just ask the database by putting an EXPLAIN statement in front:

EXPLAIN SELECT * FROM coins where symbol ='LOKA';As you can see, we're utilizing a SEQ SCAN to access our data. This is because we don't have an index on the column we're searching in yet.

We can also add the ANALYZE keyword to take an even deeper look into the execution plan:

DISCLAIMER: The ANALYZE option executes the statement to record actual timing and row counts! This is mostly harmless for SELECT statements but it modifies your data when using it for INSERT, UPDATE or DELETE operations. To avoid the risk of accidentally modifying your data, you should enclose it in a transaction and perform a rollback afterwards.

EXPLAIN ANALYZE SELECT * FROM coins where symbol ='LOKA';We're scanning exactly 10,412 table rows in 0.847 ms to find LOKAs row. Is this bad? Well.. let's find out

Creating The Index

Right now, we're only interested in indexing the columns we're actually searching in. To create our index, we need to execute the following query:

CREATE INDEX idx_coins_symbol ON coins(symbol);Which indexes the entire column symbol on the table coins and stores it in idx_coins_symbol.

Searching With Index

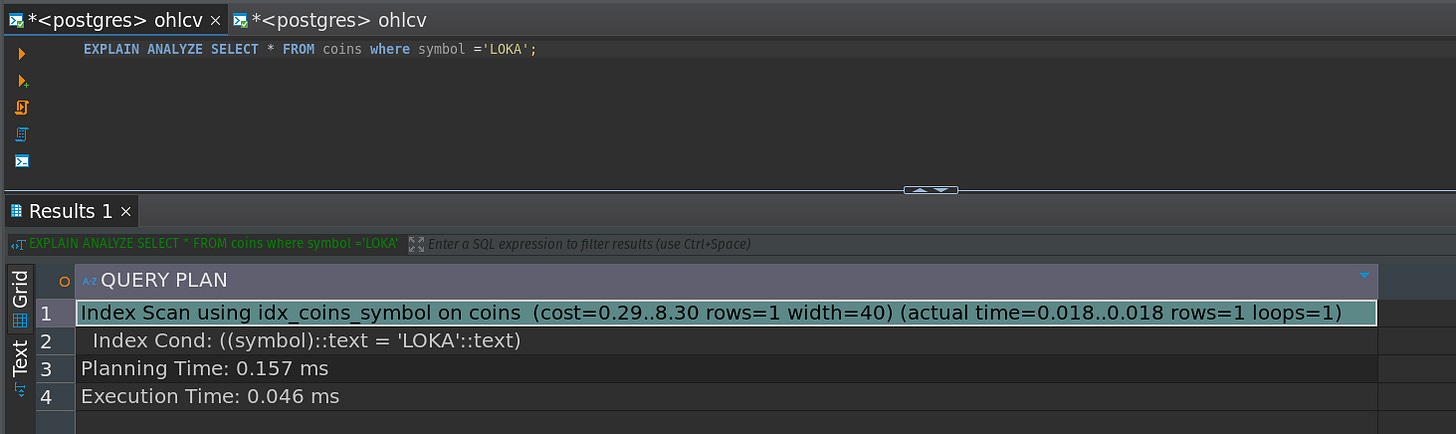

Let's have a look at the execution plan the database comes up with after we've created the index. Again using the EXPLAIN ANALYZE SELECT * FROM coins where symbol ='LOKA' query.

What changed?

Our execution time went down from 0.847 ms to 0.046 ms. Our query is about 18 times faster after adding the index (0.847 / 0.046 ~ 18.4). This is because the database was able to pinpoint the rows location without scanning the whole table to find it due to the B-tree index.

Now you might say, what's all the fuzz? This whole indexing thing sounds rather complicated and we only sped things up by 18x. Well.. yes, because the coins table has only 10K rows in total. Our SELECT query isn't really a real-world use case yet. It's kept simple to make the concept clear. Let's have a look at some more realistic way of accessing data.

But first remove the index:

DROP INDEX IF EXISTS idx_coins_symbol;Joining Without Index

A common - yet still not really representative - SQL operation for systematic traders is the fetching of all OHLCV rows for a given coin. For example to render its price history as a candlestick chart. Since we've linked the tables to one another using a FOREIGN KEY on the coin_id of the coins table, we need to utilize something like the JOIN Clause or chaining two SELECTs where we wirst find the coins id and then search for it in the ohlcv table. Using the JOIN clause is almost always superior because it gets executed in one go. The two SELECT statements would make two round-trips to the database, introducing latency issues, which are very nasty to deal with at scale.

SELECT ohlcv.*

FROM ohlcv

JOIN coins ON ohlcv.coin_id = coins.id

WHERE coins.symbol = 'LOKA';It's execution plan looks like this:

It takes us 427.8 ms to get the data. The optimizer used a bunch of different methods to filter for it but again relied heavily on SEQ SCAN due to a missing index. Let's see how things change when we use an index:

Joining With Index

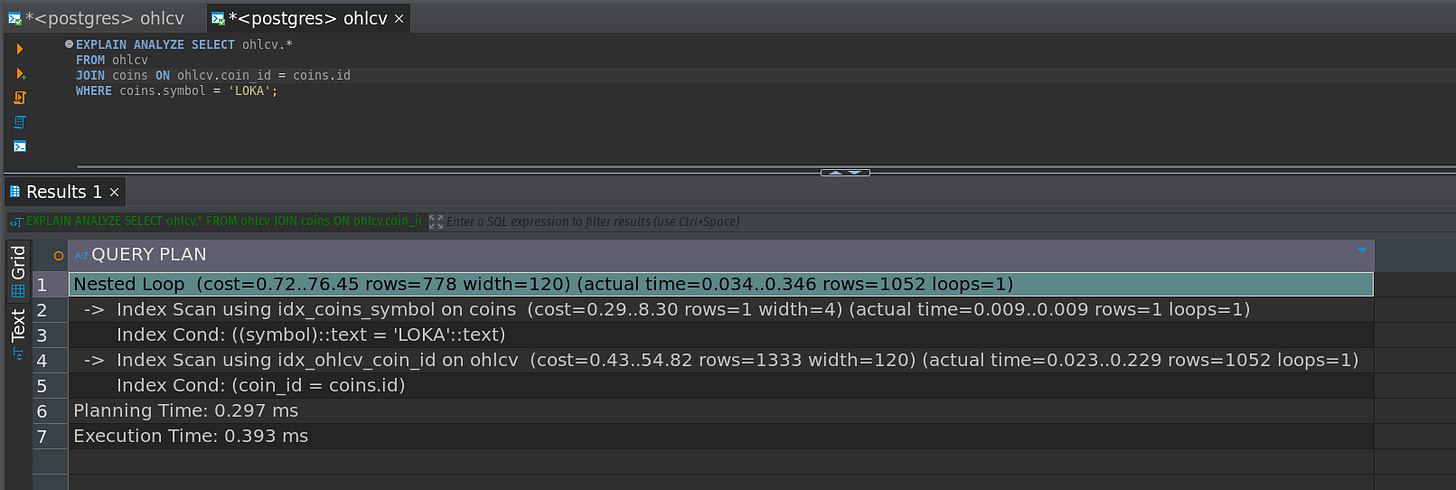

If we want to create an index for columns we're searching for, what columns do we need to consider? We're kind of accessing two different tables via two different columns here. First we scan the symbol column in the coins table to find the coins id, which we then use to find its corresponding rows in the ohlcv table. So at the very least, we should index the symbol column in the coins table.

CREATE INDEX idx_coins_symbol ON coins(symbol);This only midly sped up things. We're getting our coin id quicker but as we saw, this was only an improvement of 18x on the 10K rows. The planner is still not sure where to look for in the ohlcv table.

Let's put an index on the coin_id column in the ohlcv table too!

CREATE INDEX idx_ohlcv_coin_id ON ohlcv(coin_id);The planner is now able to use and INDEX SCAN on both tables, which drastically decreases execution time from 427 ms down to 0.393 ms, a 1000x improvement.

The gist of it

Why do we gain a 1000x improvement all of a sudden, the first example only sped up things by 18x?

Because the first example was just a SELECT on a super tiny table. 10K rows is basically nothing. There's only so much performance improvement to be had for this small amount of data.

If we search in bigger tables or combine multiple tables, things get more spicy. Sifting through 10K rows one by one is a whole different story than doing the same on 10 million rows or more.

For now, every time we want to search in a column, we should put an index on it. As the datahub and its requirements grow, we're going to face different scenarios and will adapt to them by choosing the right indexing and accessing techniques.

To round this week of, simply include both b-trees in the sql seed file.

CREATE INDEX idx_coins_symbol ON coins(symbol);

CREATE INDEX idx_ohlcv_coin_id ON ohlcv(coin_id);- Hōrōshi バガボンド

Disclaimer: The content and information provided by Vagabond Research, including all other materials, are for educational and informational purposes only and should not be considered financial advice or a recommendation to buy or sell any type of security or investment. Always conduct your own research and consult with a licensed financial professional before making investment decisions. Trading and investing can involve significant risk, and you should understand these risks before making any financial decisions